23 Oct 2024

The first investigation of loopback performance focused on the randomness of the RTT measurements on loopback, and the fix for BBR was to not make congestion control decisions based on delays when the RTT is small. After that, the results “looked good”, although there was still quite a bit of variability. The tests were made by sending 1GB of data. Looking at traces, it seemed that many connections were starting to slow down at the end of the 1GB transfer. I thus repeated the experiments, this time sending 10GB of data instead of 1GB. That was quite instructive: the data rate of a Cubic connection dropped by a factor of 2!

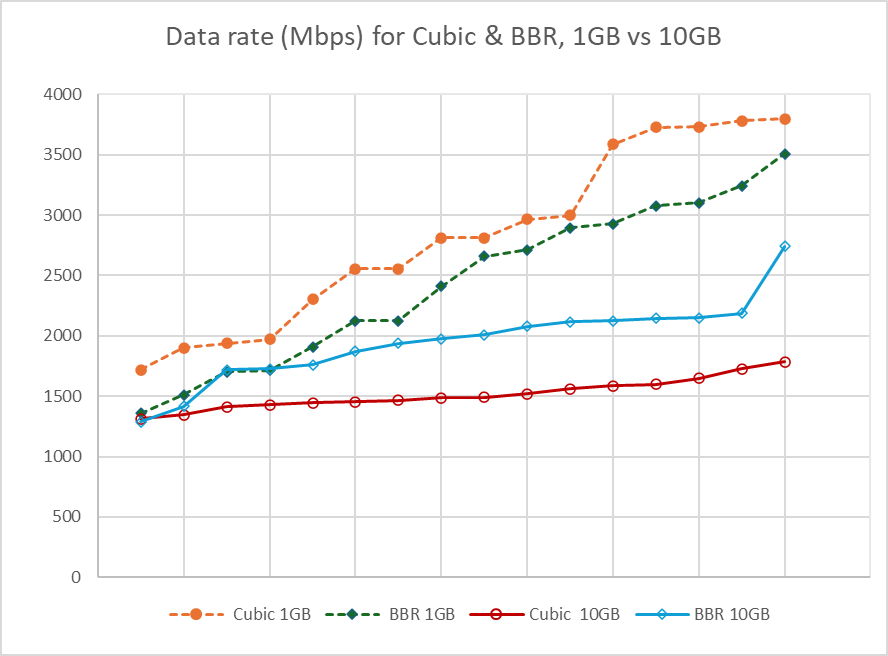

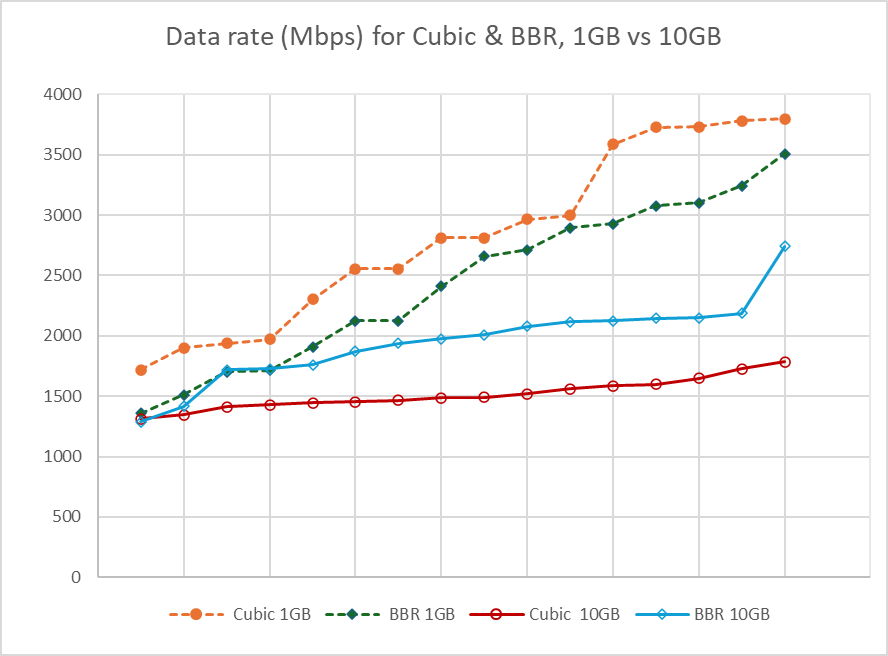

Figure 1 shows the result. Each line corresponds to 16 connections, downloading either 1GB or 10GB between a picoquic client and a picoquic server on my laptop (Dell XPS 16, Intel(R) Core(TM) Ultra 9 185H 2.50 GHz, Windows 11 23H2) for each of BBR and Cubic, using the PERF application protocol. The Cubic line for 10GB transfers shows data rates varying from 1.3 to 1.8 Gbps, a stark contrast with the 1.7 to 3.5 Gbps observed on the 1GB downloads.

Checking the statistics for the various connections did not show any excessive rate of packet retransmission, but it did show that the client sent only about 50,000 acknowledgements per connection, which translates to an acknowledgement every 1.1ms. That does not compare well with the average RTT of 65 microseconds. These long intervals between acknowledgements could be traced to the “ACK Frequency” implementation: the value of the “Min ACK delay” was set to 1ms, and the value of the “max gap” was set to one quarter of the congestion window. Detailed traces showed that this congestion window was initially quite large, which explained why ACKs were so infrequent. Infrequent ACKs meant that the Cubic code was not getting feedback quickly enough, and it reacted by slowing down over time.
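The arithmetic behind that comparison can be checked with a short sketch. The transfer size, ACK count and average RTT are the values quoted above; the 1.5 Gbps data rate is an assumed mid-range value for the 10GB Cubic runs, and the function name is only illustrative.

```python
def mean_ack_interval_us(transfer_bytes, rate_bps, ack_count):
    """Average time between ACKs, in microseconds, over one transfer."""
    duration_s = transfer_bytes * 8 / rate_bps
    return duration_s / ack_count * 1e6


# 10GB transfer, assumed 1.5 Gbps (middle of the observed 1.3 to 1.8 Gbps range),
# about 50,000 acknowledgements per connection.
interval_us = mean_ack_interval_us(10e9, 1.5e9, 50_000)
rtt_multiple = interval_us / 65  # 65 microseconds is the average RTT quoted above

print(f"{interval_us:.0f} us between ACKs, {rtt_multiple:.0f} times the average RTT")
```

Under these assumptions the interval comes out at a little over 1ms, or roughly 16 times the average RTT.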

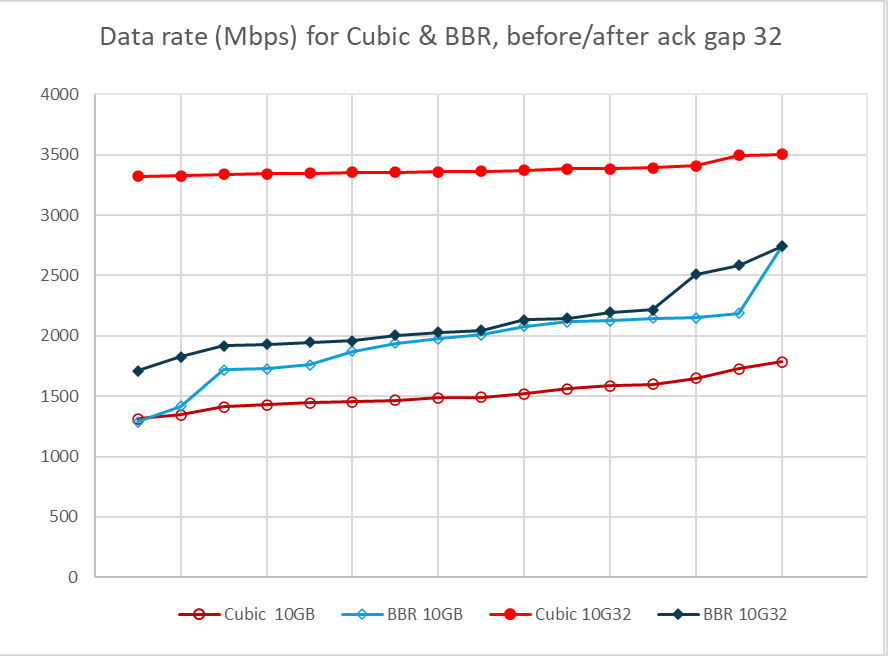

I tried a series of more or less complex fixes, but the simplest and most effective one was to cap the ACK gap value to 32 if the minimum RTT was smaller than 4 times the ACK delay. As you can see in figure 2, the effect on the Cubic performance was dramatic. The average data rate jumped to 3.4 Gbps, and the variability was much reduced, with rates ranging from 3.3 to 3.5 Gbps. The effect on BBR was also positive, but less marked.
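The rule is simple enough to write down as a few lines. This is a minimal sketch of the logic, not the picoquic code: the function and parameter names are hypothetical, and expressing the gap in packets by dividing the congestion window by an assumed packet size is my simplification.

```python
ACK_GAP_CAP = 32  # cap used in the experiment described above


def ack_gap_packets(cwnd_bytes, packet_bytes, min_rtt_us, ack_delay_us):
    """Number of packets the peer may receive before it must send an ACK."""
    # Baseline: one quarter of the congestion window, counted in packets
    # (the conversion to packets is an assumption of this sketch).
    gap = max(1, cwnd_bytes // (4 * packet_bytes))
    # Very low latency path: the RTT is small compared to the ACK delay,
    # so a window-based gap would starve the sender of feedback.
    if min_rtt_us < 4 * ack_delay_us:
        gap = min(gap, ACK_GAP_CAP)
    return gap


# Loopback-like path: tiny RTT, 1ms ACK delay, so the cap applies.
loopback_gap = ack_gap_packets(1_000_000, 1440, min_rtt_us=65, ack_delay_us=1000)
# Long path: 50ms RTT, so the window-based gap is kept.
wan_gap = ack_gap_packets(1_000_000, 1440, min_rtt_us=50_000, ack_delay_us=1000)
print(loopback_gap, wan_gap)
```

With the same assumed 1 MB window, the loopback case is held to 32 packets while the long-RTT case keeps the larger window-based gap.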

An ACK gap of 32 corresponds to about 100 microseconds between ACKs in the Cubic trials. This is still much larger than the min RTT, but close to the average smoothed RTT. Trying to get more frequent acknowledgements was counterproductive, probably because the processing of more acknowledgements increased the CPU overhead. Trying higher values like 64 was also counterproductive, because Cubic would slow down the connection.
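To see where the "about 100 microseconds" comes from, here is a quick back-of-the-envelope computation. The 3.4 Gbps rate is the average measured in the Cubic trials; the 1440-byte packet size is an assumption on my part, and the helper name is illustrative.

```python
def ack_spacing_us(ack_gap, packet_bytes, rate_bps):
    """Time needed to send `ack_gap` packets at `rate_bps`, in microseconds."""
    return ack_gap * packet_bytes * 8 / rate_bps * 1e6


# Compare the chosen gap with the smaller and larger values that were tried.
spacing = {gap: ack_spacing_us(gap, 1440, 3.4e9) for gap in (16, 32, 64)}
for gap, us in spacing.items():
    print(f"gap {gap:2d}: about {us:.0f} us between ACKs")
```

With these numbers a gap of 32 lands a bit above 100 microseconds, while 64 pushes the spacing past 200 microseconds, well beyond the smoothed RTT.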

This experiment and the previous one highlighted that “very high bandwidth very low latency” connections are a special case. The code is close to saturating the CPU, which forces a reduction in the ACK rate, with the average delay between acknowledgements often larger than the actual min RTT. The variations of delay measurement for an application level transport are quite high, which forces us to not use delay measurements to detect queues. The main control variable is then the rate of packet losses, which ended up between 0.4 and 0.5% in the experiments.

It would be nice if the interface could implement ECN markings, so congestion control could react to increased queues before losing packets. Failing that, it might make sense to monitor the “bottleneck data rate”, not necessarily to deduce a pacing rate like BBR does, but at least to stop increasing the congestion window if the data rate is not increasing. But that’s for further research.
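As a starting point for that research, the "stop increasing if the data rate is not increasing" idea could look something like the sketch below. Everything in it is hypothetical: the class name, the 5% gain threshold and the three-round patience are arbitrary choices for illustration, not values that were tested, and none of this exists in picoquic.

```python
class RatePlateauGuard:
    """Hypothetical helper: allow window growth only while the rate keeps rising."""

    def __init__(self, min_gain=1.05, patience=3):
        self.best_rate = 0.0      # highest delivery rate seen so far, in bits/s
        self.stalled_rounds = 0   # consecutive rounds without meaningful gain
        self.min_gain = min_gain  # a round must beat best_rate by this factor
        self.patience = patience  # stalled rounds tolerated before freezing

    def on_round(self, delivery_rate_bps):
        """Feed one rate sample per round trip; return True if cwnd may grow."""
        if delivery_rate_bps >= self.best_rate * self.min_gain:
            self.best_rate = delivery_rate_bps
            self.stalled_rounds = 0
        else:
            self.stalled_rounds += 1
        return self.stalled_rounds < self.patience


guard = RatePlateauGuard()
samples = [1.0e9, 2.0e9, 3.0e9, 3.0e9, 3.05e9, 3.1e9]
decisions = [guard.on_round(rate) for rate in samples]
print(decisions)
```

The guard only gates growth; the loss-based reaction of the congestion controller would stay unchanged. In the sample run, growth is allowed while the rate climbs to 3 Gbps and is frozen after three rounds without a 5% improvement.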

If you want to start or join a discussion on this post, the simplest way is to send a toot on the Fediverse/Mastodon to @huitema@social.secret-wg.org.