13 Oct 2024

I did a quick experiment last week, measuring the performance of picoquic on a loopback interface. The goal was to look at software performance, but I wanted to tease out how much of the performance depends on CPU consumption and how much depends on control, in particular congestion control. I compared connections using Cubic and my implementation of BBRv3, and observed that BBRv3 was significantly slower than Cubic. The investigation pointed to the dependency of BBRv3 on RTT measurements, which in application-level implementations contain a lot of jitter caused by process scheduling during calls to OS APIs. This blog presents the problem, the analysis, the first fixes, and the open question of bandwidth measurement in low latency networks.

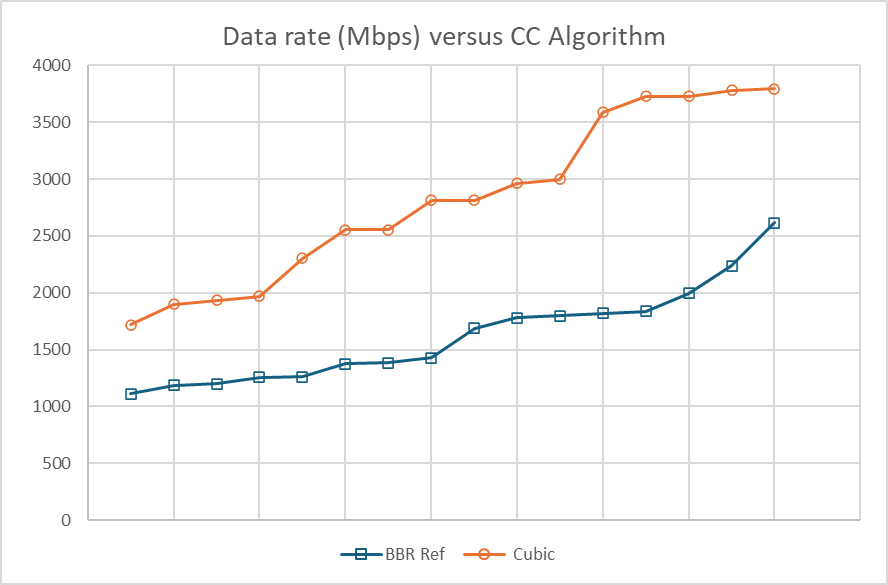

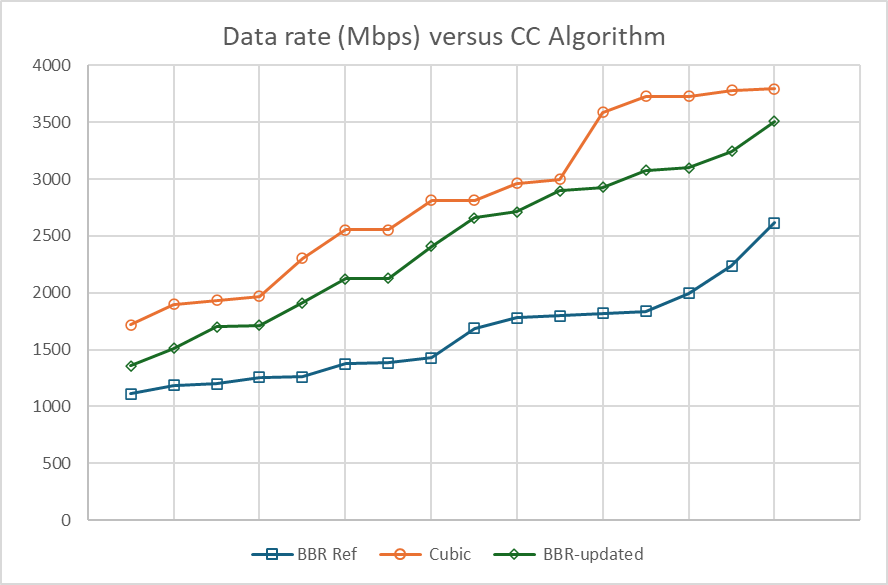

The results of the loopback tests are displayed in figure 1. For each of BBR and Cubic, I ran 16 QUIC connections between a picoquic client and a picoquic server on my laptop (Dell XPS 16, Intel(R) Core(TM) Ultra 9 185H 2.50 GHz, Windows 11 23H2), downloading 1 GB from the server using the PERF application protocol. The figure shows the observed data rates in Mbps, 16 values per congestion control protocol, with each protocol's values sorted from lowest to highest. These first tests showed that BBR is markedly slower, averaging 1.7 Gbps versus 3 Gbps for Cubic. The BBR data rates are also much more variable, between 1.1 and 2.4 Gbps, versus between 2.6 and 3.8 Gbps for Cubic.

To analyze the results, I had to add “in memory” logging to the picoquic stack. The current logging writes to disk, which impacts performance, and thus cannot easily be used to understand performance at high speed. I instrumented the code to log a set of variables in a large in-memory table. That requires allocating a couple hundred MB at program start for logging one connection, so it is not something that should be done in production. But it does work on single connections, and the impact on performance is acceptable. I dug into two connections: one using Cubic, getting 3 Gbps on loopback on Windows; another using BBR and getting 2 Gbps.
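The principle of in-memory logging can be sketched as follows: allocate one big table up front, append fixed-size records on the data path with nothing more than a bounds check and a copy, and only format and write the data once the connection is over. This is a minimal sketch, not the picoquic instrumentation; the record layout and all names (`mem_log_record_t`, `mem_log_append`, and so on) are hypothetical.

```c
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical record: one row per logged event. */
typedef struct {
    uint64_t time_us;          /* event time, microseconds */
    uint64_t cwnd;             /* congestion window, bytes */
    uint64_t bytes_in_transit; /* sent but not yet acknowledged, bytes */
    uint64_t pacing_rate;      /* pacing rate, bytes per second */
    uint64_t rtt_sample_us;    /* latest RTT sample, microseconds */
    uint32_t cc_state;         /* congestion control state, e.g. BBR phase */
} mem_log_record_t;

typedef struct {
    mem_log_record_t *records; /* preallocated table */
    size_t capacity;           /* number of slots in the table */
    size_t count;              /* slots used so far */
    size_t dropped;            /* events lost because the table was full */
} mem_log_t;

/* Allocate the whole table at program start, so that logging never
   allocates memory or performs I/O on the data path. */
int mem_log_init(mem_log_t *ml, size_t capacity)
{
    ml->records = calloc(capacity, sizeof(mem_log_record_t));
    ml->capacity = (ml->records != NULL) ? capacity : 0;
    ml->count = 0;
    ml->dropped = 0;
    return (ml->records != NULL) ? 0 : -1;
}

/* Hot path: one bounds check and one struct copy. */
void mem_log_append(mem_log_t *ml, const mem_log_record_t *rec)
{
    if (ml->count < ml->capacity) {
        ml->records[ml->count++] = *rec;
    } else {
        ml->dropped++;
    }
}

/* Called once after the connection ends: write the table as CSV. */
int mem_log_dump_csv(const mem_log_t *ml, FILE *f)
{
    if (fprintf(f, "time_us,cwnd,bytes_in_transit,pacing_rate,rtt_us,state\n") < 0) {
        return -1;
    }
    for (size_t i = 0; i < ml->count; i++) {
        const mem_log_record_t *r = &ml->records[i];
        if (fprintf(f, "%llu,%llu,%llu,%llu,%llu,%lu\n",
                    (unsigned long long)r->time_us,
                    (unsigned long long)r->cwnd,
                    (unsigned long long)r->bytes_in_transit,
                    (unsigned long long)r->pacing_rate,
                    (unsigned long long)r->rtt_sample_us,
                    (unsigned long)r->cc_state) < 0) {
            return -1;
        }
    }
    return 0;
}

/* Release the table after the dump. */
void mem_log_free(mem_log_t *ml)
{
    free(ml->records);
    ml->records = NULL;
    ml->capacity = 0;
    ml->count = 0;
}
```

With a few dozen bytes per record, a table holding a few million records is what pushes the allocation into the hundreds of megabytes. Counting dropped records, rather than silently wrapping around, makes it obvious when the table was too small for the connection being studied.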

The Cubic connection is easy to understand: Cubic basically gets out of the way. The CWND is always much larger than the bytes in transit and the pacing rate is very high, so the sender ends up CPU bound. On the other hand, the analysis of the BBR logs showed that the sending rate was clearly limited by the pacing rate set by BBR.

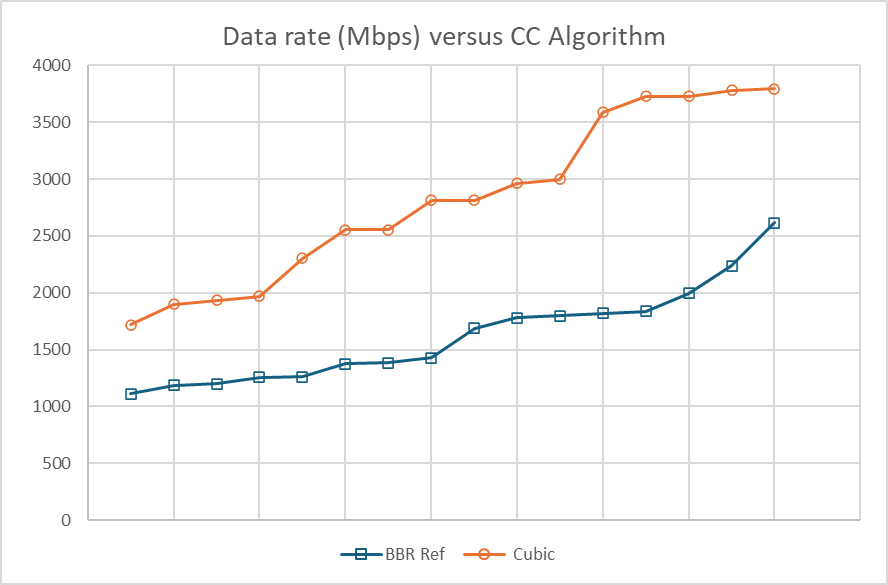

Figure 2 shows the evolution of the pacing rate (dotted orange line) and the measured throughput (dark green line) over 4 seconds of the connection. It also shows the RTT measurements in microseconds at the bottom of the graph (downsampled from 700,000 samples to about 20,000), and the evolution of the BBR internal state as a light blue line. Throughput is clearly limited by the pacing rate, but the pacing rate varies widely. This was surprising, because BBRv3 normally alternates between probing phases and long periods in the “cruise” state. The graph also shows the variations of the RTT, with RTT spikes at about the same times as the transitions in the pacing rate.

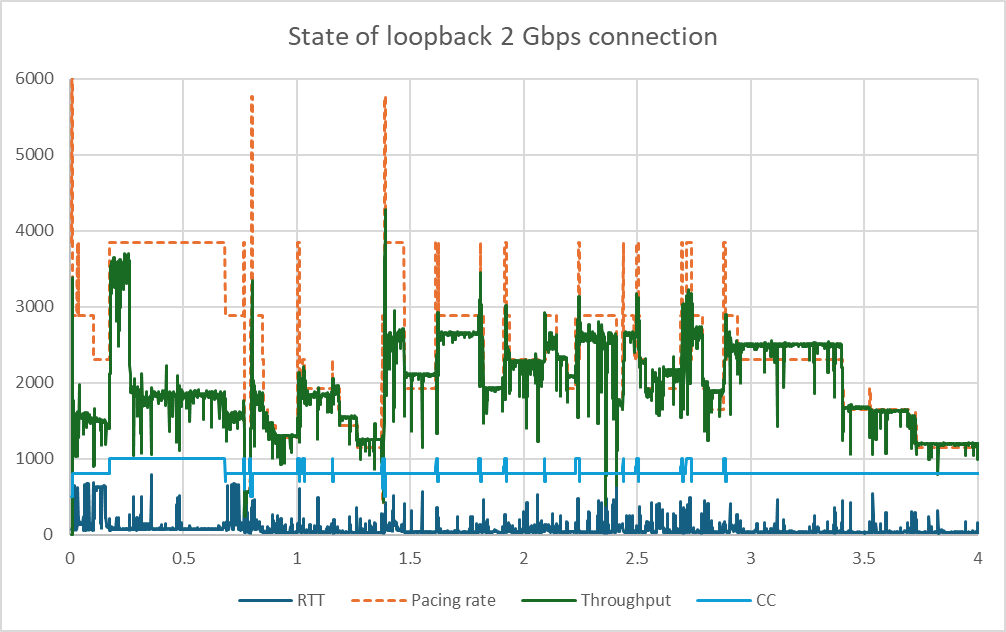

Figure 3 displays a histogram of the RTT samples throughout the connection. The values are widely dispersed, from a minimum of 20 microseconds to outliers up to 1400 microseconds. Congestion control algorithms based on delay measurements assume that increases in delay indicate queues building up in the network, and treat them as a signal to slow down the connection. However, the graph in figure 2 does not show a clear correlation between sending rate and queue buildup.
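For readers who want to reproduce this kind of analysis from their own logs, here is a minimal sketch of bucketing RTT samples into a fixed-width histogram and extracting the minimum. The 20-microsecond bucket width, the 2 ms range, and the function names are arbitrary choices for illustration, not how figure 3 was produced.

```c
#include <stddef.h>
#include <stdint.h>

#define RTT_BUCKET_US 20   /* bucket width in microseconds (illustrative) */
#define RTT_NB_BUCKETS 100 /* covers 0 to 1999 us; the last bucket also collects larger samples */

/* Count RTT samples per fixed-width bucket. */
void rtt_histogram(const uint64_t *samples_us, size_t n,
                   uint64_t counts[RTT_NB_BUCKETS])
{
    for (size_t b = 0; b < RTT_NB_BUCKETS; b++) {
        counts[b] = 0;
    }
    for (size_t i = 0; i < n; i++) {
        uint64_t b = samples_us[i] / RTT_BUCKET_US;
        if (b >= RTT_NB_BUCKETS) {
            b = RTT_NB_BUCKETS - 1; /* clamp outliers into the last bucket */
        }
        counts[b]++;
    }
}

/* Smallest sample, i.e. the apparent minimum RTT of the path;
   returns UINT64_MAX when there are no samples. */
uint64_t rtt_min(const uint64_t *samples_us, size_t n)
{
    uint64_t m = UINT64_MAX;
    for (size_t i = 0; i < n; i++) {
        if (samples_us[i] < m) {
            m = samples_us[i];
        }
    }
    return m;
}
```

On a path whose minimum RTT is around 20 microseconds, a sample of 1400 microseconds is 70 times the minimum, which is why a delay-based controller can easily misread such outliers as congestion.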

Picoquic runs in the application context. Receiving data and acknowledgements requires waiting for an event until the asynchronous receive call completes, and then reading the data. Previous measurements show that the delay between raising an event on one thread and returning from the wait on another is usually very short, maybe a few microseconds, but can sometimes stretch to tens or even hundreds of microseconds. These delays happen randomly. If the path capacity is a few hundred megabits per second or less, these random delays are much lower than the delays caused by transmission queues. But in our case, they can have a nasty effect.
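A back-of-the-envelope calculation shows why the same scheduling jitter matters so much more at high speed. The sketch below converts a scheduling delay into the number of queued packets that would cause the same extra delay, assuming 1500-byte packets (an assumption chosen for round numbers; the function names are hypothetical).

```c
/* Time needed to transmit one packet at a given rate, in microseconds. */
double serialization_delay_us(double packet_bytes, double rate_bps)
{
    return packet_bytes * 8.0 * 1e6 / rate_bps;
}

/* Number of queued packets that would add the same delay as a
   scheduling hiccup of jitter_us microseconds. */
double jitter_as_queued_packets(double jitter_us, double packet_bytes, double rate_bps)
{
    return jitter_us / serialization_delay_us(packet_bytes, rate_bps);
}
```

At 100 Mbps, a 1500-byte packet takes 120 microseconds to transmit, so a 100-microsecond hiccup is worth less than one queued packet and disappears in the noise of real queues. At 3 Gbps, the same packet takes 4 microseconds, and the same hiccup looks exactly like a queue of 25 packets.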

The BBRv3 implementation in picoquic includes improvements to avoid queue buildups and maintain the responsiveness of real time applications, see this draft. Two of the proposed improvements are “exiting startup early if the delay increases” and “exiting the probeBW UP state early if the delay increases”. This works quite well on most long distance Internet connections, but in our case, these rules become “exit the probe state randomly because the measured delay increased randomly”. Not good.

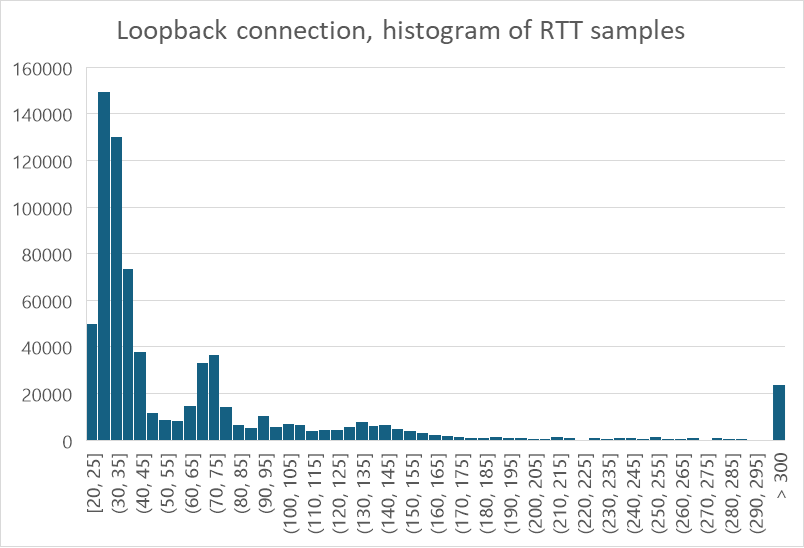

The solution was to disable these two “delay based” tests in BBR if the minimum delay of the path is very short. As a first test, we define “very short” as “less than 128 microseconds”. We may have to revisit that value in the future, but figure 4 shows that disabling the two tests results in a marked improvement. Figure 4 is identical to figure 1, except for an extra line showing 16 tests with our improved version of BBR. This version achieves data rates between 1.4 and 3.5 Gbps, and is on average 50% faster than the unmodified version. That is a big gain for changing just two lines of code, but as usual the problem is finding out which lines…
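The shape of the fix can be sketched as a guard in front of the delay-based exit test. This is a minimal sketch under stated assumptions: only the 128-microsecond threshold comes from the text above; the function names and the “delay increased” criterion (RTT sample more than 25% above the minimum RTT) are hypothetical, and picoquic's actual code differs.

```c
#include <stdbool.h>
#include <stdint.h>

/* Threshold from the post: below this minimum RTT, delay increases are
   assumed to be mostly scheduling noise rather than queueing. */
#define DELAY_TESTS_MIN_RTT_THRESHOLD_US 128

/* Hypothetical "delay increased" test: RTT more than 25% above min_rtt. */
static bool rtt_has_increased(uint64_t rtt_sample_us, uint64_t min_rtt_us)
{
    return rtt_sample_us > min_rtt_us + min_rtt_us / 4;
}

/* Decide whether startup or probeBW UP should be exited early because
   of delay. On very short paths, such as loopback, never do so. */
bool should_exit_on_delay(uint64_t rtt_sample_us, uint64_t min_rtt_us)
{
    if (min_rtt_us < DELAY_TESTS_MIN_RTT_THRESHOLD_US) {
        return false; /* delay signal too noisy to be trusted */
    }
    return rtt_has_increased(rtt_sample_us, min_rtt_us);
}
```

With this guard, a 1400-microsecond outlier on a 20-microsecond path no longer ends the probing phase, while a 30 ms sample on a 20 ms path still does.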

The results are improved, but BBR is still about 15% slower than Cubic in this scenario. I suspect that this is because the randomness in delay measurements also causes randomness in the bandwidth measurements, and that this randomness somehow causes slow-downs. In figure 2, we see periods in which BBR is in “cruise” state, yet the pacing rate suddenly drops. This could be caused by BBR receiving an abnormally low bandwidth measurement and dropping the pacing rate accordingly. The next bandwidth measurements are limited by that new pacing rate, so the rate has effectively “drifted down”. Another problem that we will need to investigate…
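The suspected “drift down” can be illustrated with a toy model, which is only a hypothesis in the same spirit as the paragraph above. Assumptions, all illustrative: the bandwidth estimate is the maximum of the last four delivery rate samples, the pacing rate in cruise equals the estimate, each sample is scaled by a noise factor no greater than 1, and the periodic upward probing of real BBR is deliberately left out. None of the names come from picoquic.

```c
#include <stddef.h>

#define BW_WINDOW 4 /* max-filter window, in rounds (illustrative) */

/* Toy cruise-phase model. noise[i] in (0, 1] scales the delivery rate
   measured in round i to mimic measurement jitter. Returns the
   bandwidth estimate after n rounds. */
double simulate_cruise(double capacity, double initial_estimate,
                       const double *noise, size_t n)
{
    double window[BW_WINDOW];
    for (size_t k = 0; k < BW_WINDOW; k++) {
        window[k] = initial_estimate;
    }
    double estimate = initial_estimate;
    for (size_t i = 0; i < n; i++) {
        double pacing = estimate; /* cruise: pacing gain of 1 */
        double sent = (pacing < capacity) ? pacing : capacity;
        /* The sample can never exceed the pacing rate. */
        window[i % BW_WINDOW] = sent * noise[i];
        estimate = window[0];
        for (size_t k = 1; k < BW_WINDOW; k++) {
            if (window[k] > estimate) {
                estimate = window[k];
            }
        }
    }
    return estimate;
}
```

Starting at capacity, a single low sample is absorbed by the max filter, but four consecutive samples at half the rate flush the window, and from then on every sample is capped by the reduced pacing rate, so the estimate stays at half the capacity. In BBR proper, the upward probing phases give the estimate a chance to recover, so the effect would presumably show up as intermittent drops rather than a permanent collapse.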

If you want to start or join a discussion on this post, the simplest way is to send a toot on the Fediverse/Mastodon to @huitema@social.secret-wg.org.