12 Jan 2019

Maybe you have heard of QUIC, a new transport protocol that combines functionalities of TCP and TLS, plus some new features like parallel handling of streams and latency minimization through 0-RTT. The work started at Google 5 years ago, as part of their effort to speed up the web. The IETF picked up the early work from Google, and is working through its standardization. There is a great deal of innovation happening there, and it is made possible by a series of design choices, such as running over UDP to cross NAT and firewalls, complete encryption of the transport headers to prevent interference from the middle of the network, and implementation in user space. It is all very cool, but there is a potential danger. Implementations can innovate and deploy new congestion control algorithms pretty much at will. That could trigger a race to the bottom, and potentially break the Internet, unless network providers start deploying adequate Active Queue Management algorithms.

Before 1988, the Internet was occasionally the victim of congestion events. I was working at INRIA in France at the time, and some links in our early TCP/IP network were quite slow: X.25 virtual circuits that maxed out at 9600 bps. It worked some of the time, and then there would be a congestion event. Some node would start sending too fast, queues would build up, delays would increase and trigger retransmissions, which would in turn lengthen the queues, and very soon nothing useful could be done. This was eventually fixed in 1988, with the work of Lixia Zhang on adaptive timers and of Van Jacobson on congestion control algorithms – the algorithm that came to be known as "TCP Reno".

The Internet has grown a lot since 1988. Link speeds quickly reached megabits per second, and then gigabits. Some people may complain that their connection is too slow, but we have not experienced anything like the congestion collapses of the early era. The traditional stance is that the Internet only remained stable because most of the traffic uses TCP, with congestion control algorithms designed to back off in the presence of congestion. The design of the TCP stacks tends to be very conservative. There were some updates in the 90's to accommodate larger transmission speeds and more efficient retransmissions, but the congestion control logic remained very much the same. The only major change happened in the Linux kernel in 2006, with the replacement of "New Reno" by "Cubic" – 18 years after Van Jacobson's design. Cubic does in fact keep a lot of the design principles of Reno, probing slowly for increased capacity and backing off promptly in case of congestion. This slow pace of change may soon come to an end.
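The "probe slowly, back off promptly" principle can be illustrated with a toy additive-increase, multiplicative-decrease loop. This is only a sketch under simplifying assumptions: the function name `aimd_window` and its parameters are made up for the illustration, a loss is assumed whenever the window exceeds a fixed `capacity`, and slow start, timeouts and fast recovery are all left out. It is not the code of any real TCP stack.

```python
def aimd_window(capacity, rounds, increase=1.0, decrease=0.5, start=1.0):
    """Return the congestion window (in packets) after each round trip.

    Hypothetical model: a congestion signal is assumed whenever the
    window is larger than `capacity`.
    """
    cwnd = start
    history = []
    for _ in range(rounds):
        if cwnd > capacity:
            # Congestion detected: back off promptly (multiplicative decrease).
            cwnd = max(1.0, cwnd * decrease)
        else:
            # No congestion: probe slowly (additive increase per round trip).
            cwnd += increase
        history.append(cwnd)
    return history


trace = aimd_window(capacity=10, rounds=30)
print(trace)
```

Plotting `trace` gives the familiar sawtooth: the window climbs by one packet per round trip, overshoots the capacity, is cut in half, and starts climbing again.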

Change was slow in large part because the TCP stacks were shipped as part of the operating systems, and there never were very many operating systems. Operating system developers are by nature conservative. If you are working on Windows, Linux or FreeBSD, you don't want to ship a code update that might cause Internet congestion. Anything you do will have to pass multiple reviews, and deviation from standards would require a lot of justification. In fact, that conservative stance was pretty much one of the motivations for developing QUIC as an "application level" protocol. It runs over UDP, and the code is shipped as a library, compiled with the application. Google does not have to wait for Microsoft to update the TCP stack in Windows: it can ship a new version of the QUIC library in an update to the Chrome browser. Instead of getting an operating system update every year or so, the application can be updated every month, maybe even every day. The operating system developers acted as gatekeepers of transport innovation. Shipping the code with the application bypasses these gatekeepers.

On one hand, removing the gatekeepers has immediate benefits, because it enables the development of new transport features. QUIC can for example deploy 0-RTT transmission, so that the first web request can be sent from client to server without waiting for complete establishment of the connection. It mitigates head-of-line blocking by managing simultaneous transmission of multiple streams. Future updates may bring partial reliability or forward error correction. This increased deployment agility is most welcome. On the other hand, that same agility enables immediate deployment of "alternative" congestion control algorithms. Some of that may be good, such as designing algorithms that react better than New Reno or Cubic to the specific behavior of wireless links. But there is a risk that developers will abuse this newfound agility and engage in a race towards ever more aggressive behaviors. If everybody tunes their algorithms to be a little faster than the competition, the net result could well be a return of congestion collapses, just like we saw in the pre-1988 Internet.

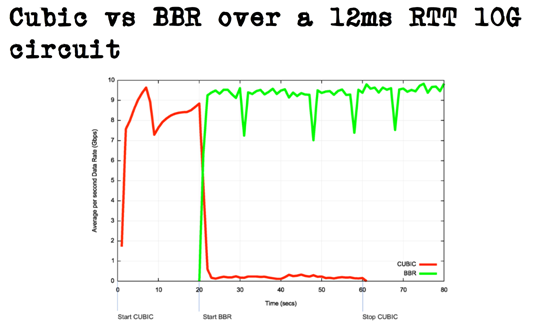

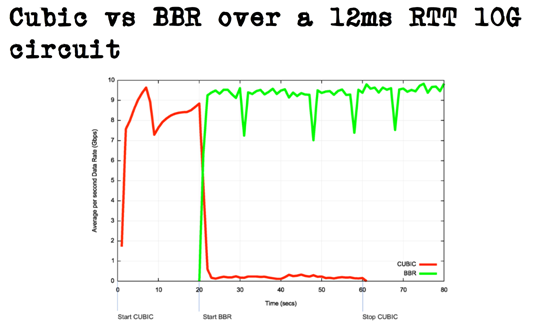

In fact, Google has already started shipping QUIC with a new congestion control algorithm called BBR. BBR operates by tracking the end-to-end delay and trying to keep it small, backing off when an increase in sending rate creates a disproportionate increase in queuing delay. It does deliver high bandwidth and low latency, which is great. But it also delivers something else: domination over the competitors. The graph above is taken from a presentation by Geoff Huston at the RIPE 76 conference. It shows what happens when a 10 Gbps link is shared between two connections, one managed using the Cubic algorithm that ships with Linux and Windows, and the other with BBR. In Geoff's words, "that was brutal": very soon after the BBR connection starts, the throughput of the Cubic connection drops to almost zero. I don't have actual measurements, but it may well be that if two users are trying to access the web from the same home, the one accessing Google servers using Chrome will get most of the bandwidth, while the other, accessing some other server, will be left with a very slow connection.

Let's be clear and not rush to assign bad intentions to my colleagues at Google. There is no proof that the dramatic effect shown in Geoff's slide was deliberate. It may well be the unintended side effect of the design priority, achieving low latency. It may well be that the design will be fixed to achieve better behavior, since after all the code can be readily updated. Geoff Huston's detailed analysis of BBR shows that there are indeed some efforts in that direction. But still, the optics are not good.

Faced with results like that, the competitors are going to react. They cannot really accept being pushed into a slow lane. The most expedient development could be to just use QUIC, since the IETF standardization has progressed well, and of course ship it with their own copy of BBR. But there is the latent temptation to do even better, to "move to an even faster lane". Maybe they will just change a couple of parameter values in the BBR algorithm to make it "faster". Maybe they will invent their very own congestion control algorithm. Remember, there are no gatekeepers any more. If you can ship the same software to your app and to your servers, you can compete.

That's not an entirely new problem. Some applications have been accused of doing that for a long time. For example, some video conference applications are said to send video just as fast as they can, because they are "real-time". (In fact, congestion control for video conferences was demonstrated in the early 90's.) But having widespread deployment of new congestion control algorithms changes the game. In fact, it exposes a gap in the current Internet architecture. The gatekeepers in the operating systems pretty much guaranteed that network managers could rely on end-to-end congestion control to ensure stability. Well, not anymore.

The solution is probably a widespread deployment of Active Queue Management. There are many AQM algorithms. Random Early Detection and Explicit Congestion Notification were proposed years ago. More recent algorithms, such as FQ-CoDel or PIE, were developed in the IETF AQM Working Group to address the "buffer bloat" problem. My hope is that we will see a virtuous cycle: AQM algorithms get deployed at bottlenecks and ensure that each class of traffic gets a fair share. If network providers do that, there will be much less incentive to deploy "unfair" congestion control algorithms in applications. On the contrary, the algorithms will evolve to stay within the allocated fair share, while keeping queues as short as possible. That would be much better than collapsing the Internet!
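To make the incentive argument concrete, here is a small sketch of an idealized max-min fair allocation at a single bottleneck. It is not an implementation of FQ-CoDel, PIE or any other deployed AQM; the function `max_min_fair_share`, the flow names and the numbers are all assumptions chosen for the illustration.

```python
def max_min_fair_share(capacity, demands):
    """Split `capacity` among flows using max-min fairness (water-filling).

    `demands` maps a flow name to the rate that flow tries to send at.
    Hypothetical, idealized model of a bottleneck enforcing fair shares.
    """
    allocation = {flow: 0.0 for flow in demands}
    remaining = float(capacity)
    unsatisfied = dict(demands)
    while unsatisfied and remaining > 1e-9:
        share = remaining / len(unsatisfied)
        # Flows asking for no more than the equal share are fully served.
        modest = [f for f, d in unsatisfied.items() if d <= share]
        if not modest:
            # Everyone left wants more than the share: cap them all at it.
            for flow in unsatisfied:
                allocation[flow] = share
            break
        for flow in modest:
            allocation[flow] = float(unsatisfied[flow])
            remaining -= unsatisfied[flow]
            del unsatisfied[flow]
    return allocation


# Pushing ten times harder does not buy the aggressive flow anything.
print(max_min_fair_share(10, {"polite": 4, "aggressive": 100}))
print(max_min_fair_share(10, {"polite": 4, "aggressive": 1000}))
```

In this model the aggressive flow gets the same 6 units whether it offers 100 or 1000, and the polite flow keeps its 4. Once the bottleneck enforces the share, sending faster only fills the sender's own queue, which is the incentive shift described above.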