05 Jul 2022

Many network applications work better with low latency: not only video conferencing, but also video games and many transaction-based applications. The usual answer is to implement some form of QoS control in the network, managing “real-time” applications as a class apart from bulk transfers. But QoS is a special service, often billed as an extra, requiring extra management, and relying on the cooperation of all network providers on the path. That has proven very hard to deploy. What if we adopted a completely different approach, in which all applications benefited from lower latency? That is basically the promise of the L4S architecture, which has two components: a simple Active Queue Management implemented in network routers provides feedback to the applications through Explicit Congestion Notification (ECN) marks, and an end-to-end congestion control named “Prague” uses these marks to regulate network transmissions and avoid building queues. The goal is to obtain Low Latency and Low Loss, the 4 L in L4S; the final S stands for “Scalable Throughput”.

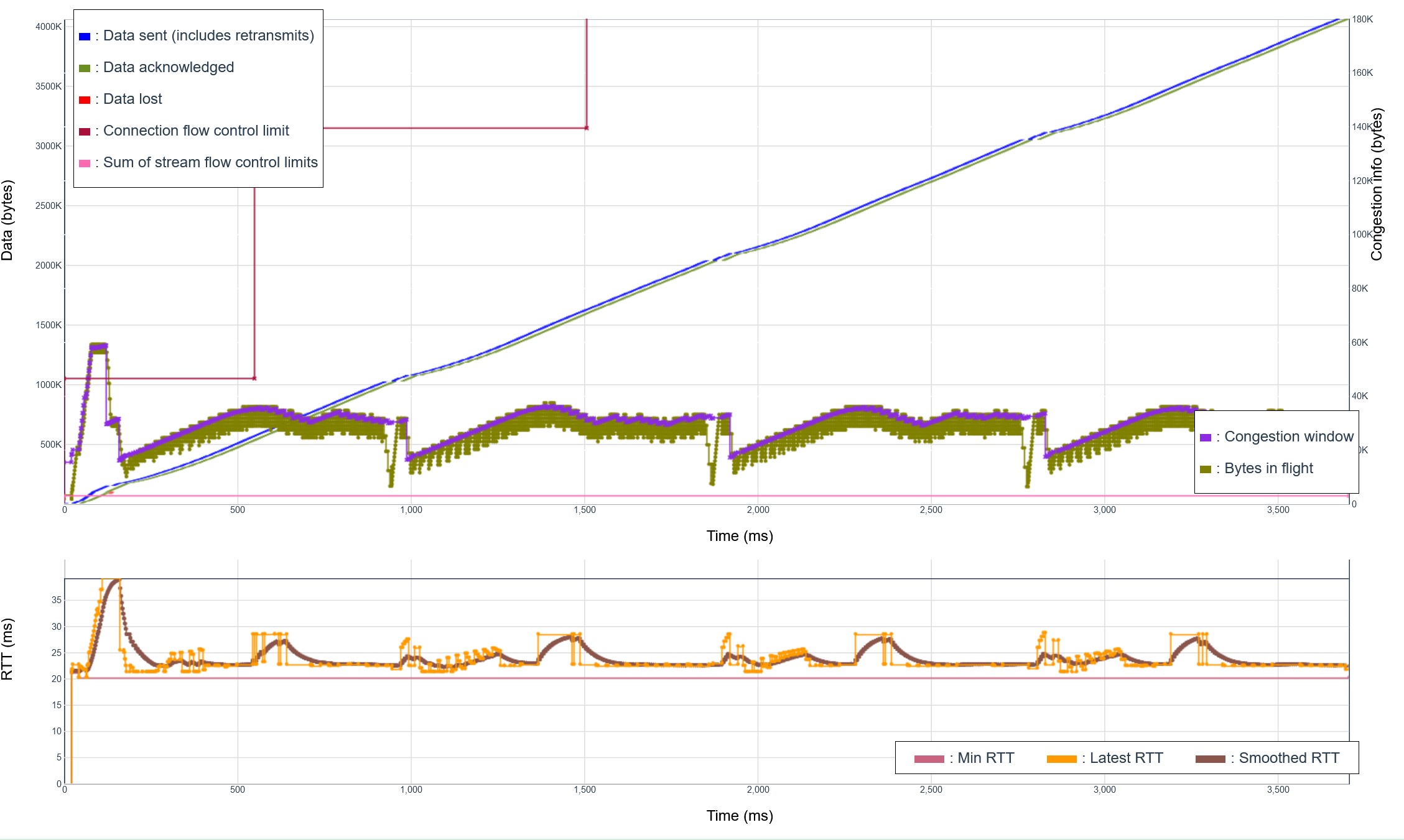

I am developing an implementation of the Prague algorithm for QUIC in Picoquic, updating an early implementation by Quentin Deconinck (see this PR). I am testing the implementation using network simulations. The first test simulates using L4S when controlling the transfer of 4 successive 1MB files on a 10 Mbps connection, and the following QLOG visualization shows that after the initial "slow start", the network traffic is indeed well controlled:

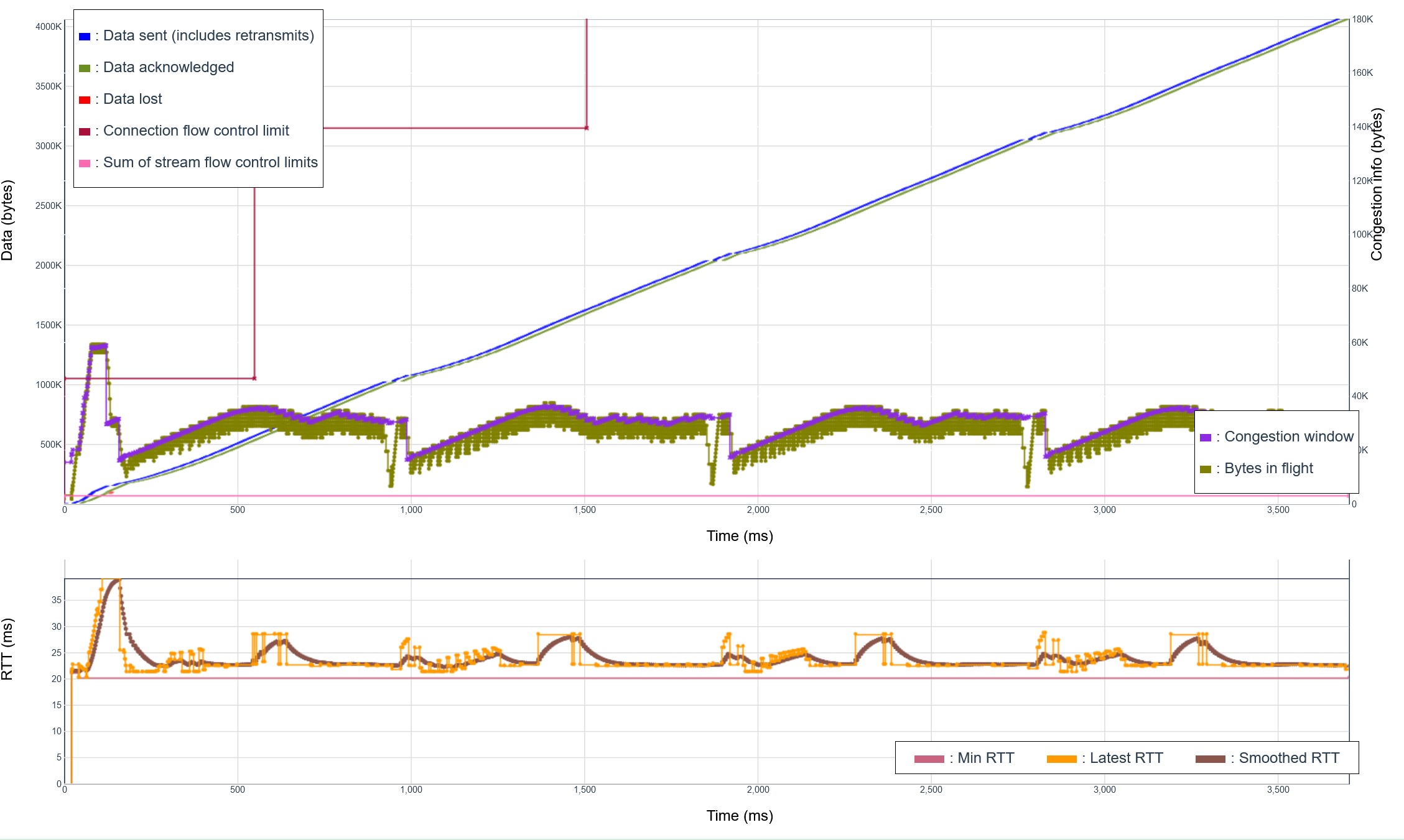

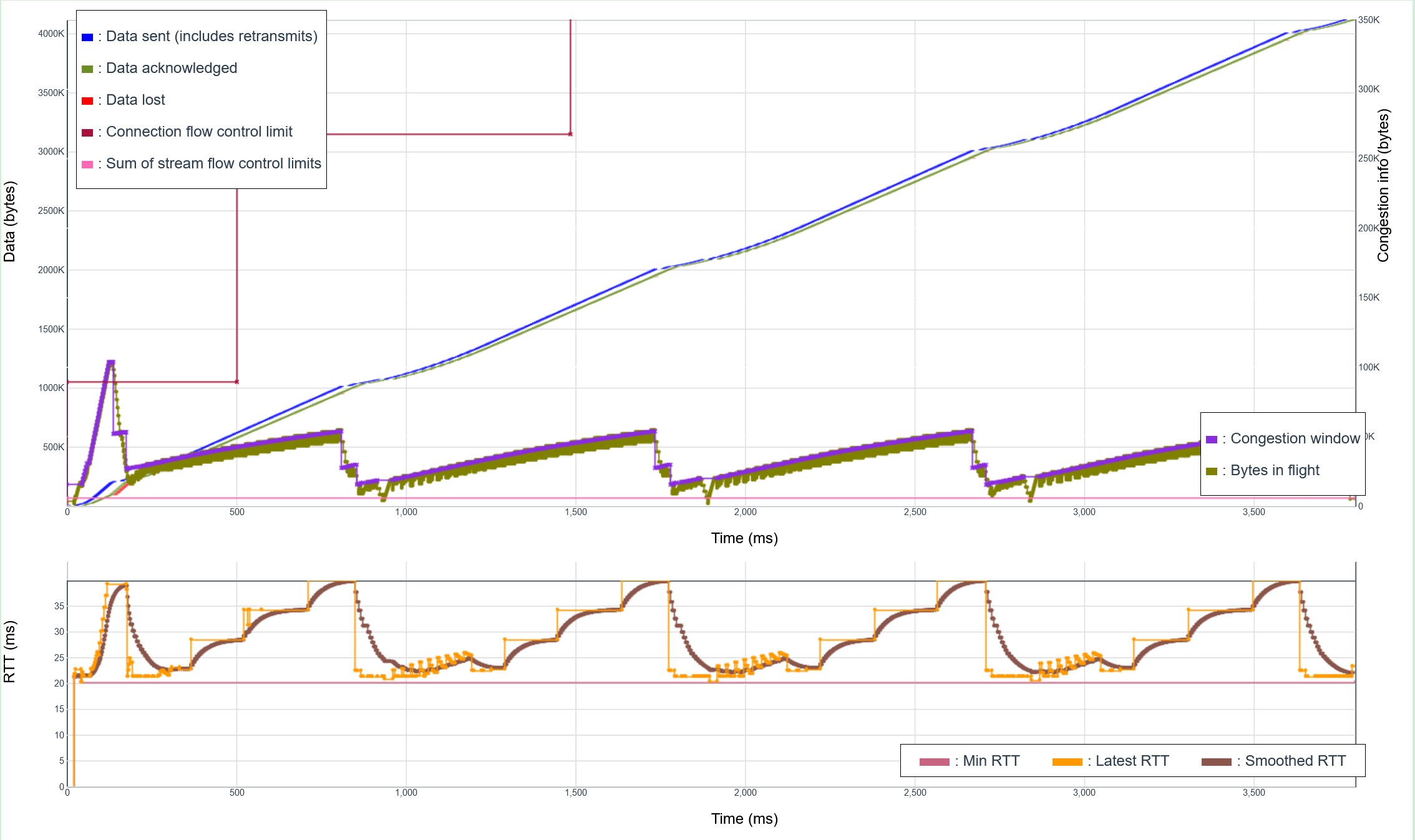

For comparison, using the classic New Reno algorithm on the same simulated network shows a very different behavior:

The Reno graph shows the characteristic saw-tooth pattern, with the latency climbing as the buffers fill up, until some packets are dropped and the window size is reset. In contrast, Prague shows a much smoother behavior. It is also more efficient:

The implementation effort did find some issues with the present specification: the Prague specification requires smoothing the ECN feedback over several round trips, but the reaction to congestion onset is then too slow; the redundancy between early ECN feedback detecting congestion and packet losses caused by the congestion needs to be managed; something strange is happening when traffic resumes from an idle period; and more effort should be applied to managing the start-up phase. I expect that these will be fixed as the specification progresses in the IETF. In any case, these numbers are quite encouraging, showing that L4S could indeed deliver on the promise of low loss and low latency, without causing a performance penalty.
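To see why smoothing the ECN feedback slows the reaction to congestion onset, here is a minimal sketch of a DCTCP-style moving average of the marked fraction. It assumes a gain of 1/16 and one update per round trip, which are common defaults but not necessarily what Picoquic uses; the function names are made up for the illustration.

```python
def update_alpha(alpha, marked, acked, gain=1.0 / 16):
    """One per-round-trip update of the smoothed marking fraction.

    Assumption: DCTCP-style exponential moving average with gain 1/16.
    """
    if acked == 0:
        return alpha  # nothing acknowledged this round, keep the estimate
    fraction = marked / acked
    return (1.0 - gain) * alpha + gain * fraction


def reduced_window(cwnd, alpha):
    """Shrink the window in proportion to the smoothed marking fraction."""
    return cwnd * (1.0 - alpha / 2.0)


def rounds_until(alpha_target, gain=1.0 / 16):
    """Count round trips of 100% marking before alpha reaches alpha_target."""
    alpha, rounds = 0.0, 0
    while alpha < alpha_target:
        alpha = update_alpha(alpha, marked=10, acked=10, gain=gain)
        rounds += 1
    return rounds


if __name__ == "__main__":
    first = update_alpha(0.0, marked=10, acked=10)
    print("alpha after one fully marked round trip:", first)
    print("window after first reaction:", reduced_window(100.0, first))
    print("round trips until alpha >= 0.5:", rounds_until(0.5))
```

Under these assumptions, a flow that suddenly sees every packet marked trims its window by only about 3% after the first round trip, and needs 11 round trips before the smoothed fraction passes 0.5.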

Other protocols, like BBR, also deliver low latency without requiring network changes, but they have limits: they still suffer from congestion caused by applications using Cubic, and they react slowly to changes in the underlying network. BBR is worse than Prague in that respect but performs better on networks that do not yet support L4S, or on high-speed networks. Ideally, we should improve BBR by incorporating the L4S mechanisms. With that, we could evolve the Internet to always deliver low latency, without having to bother with the complexity of managing QoS. That would be great!

Edited, a few hours later:

It turns out that the "strange [thing] happening when traffic resumes from an idle period" was due to an interaction between the packet pacing implementation in Picoquic and the L4S queue management. Pacing is implemented as a leaky bucket, with the rate set to the data rate computed by congestion control and the bucket size set large enough to send small batches of packets. When the source stops sending for a while, the bucket fills up. When it starts sending again, the full bucket allows for quickly sending this batch of packets. After that, pacing kicks in. This builds up a small queue, which is generally not a big issue. But if the pacing rate is larger than the line rate, even by a tiny bit, the queue does not drain, and pretty much all packets get marked as "congestion experienced."
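The interaction described above can be reproduced with a toy simulation. This is only a sketch: the pacer class, the step-marking queue, the 1 ms marking threshold, and all the rates are assumptions chosen for the illustration, not the Picoquic implementation, and the sender here never reacts to the marks.

```python
class LeakyBucketPacer:
    """Hypothetical pacer: credits accrue at `rate_pps`, capped at `bucket_packets`."""

    def __init__(self, rate_pps, bucket_packets):
        self.rate = rate_pps
        self.bucket_max = float(bucket_packets)
        self.tokens = self.bucket_max  # bucket is full after an idle period
        self.last = 0.0

    def _refill(self, now):
        self.tokens = min(self.bucket_max,
                          self.tokens + (now - self.last) * self.rate)
        self.last = now

    def next_send_time(self, now):
        """Earliest time at which one more packet may leave."""
        self._refill(now)
        if self.tokens >= 1.0:
            return now
        return now + (1.0 - self.tokens) / self.rate

    def on_send(self, now):
        self._refill(now)
        self.tokens -= 1.0


def simulate_marks(pacing_pps, line_pps, bucket_packets, n_packets,
                   mark_threshold_s=0.001):
    """Return one boolean per packet: True if the queue would mark it CE.

    Assumption: the bottleneck marks any packet that waits longer than
    `mark_threshold_s` in the queue (a simple step threshold).
    """
    pacer = LeakyBucketPacer(pacing_pps, bucket_packets)
    service = 1.0 / line_pps  # time to transmit one packet on the link
    now, link_free_at, marks = 0.0, 0.0, []
    for _ in range(n_packets):
        now = pacer.next_send_time(now)
        pacer.on_send(now)
        wait = max(0.0, link_free_at - now)  # queuing delay for this packet
        marks.append(wait > mark_threshold_s)
        link_free_at = max(now, link_free_at) + service
    return marks


if __name__ == "__main__":
    for pacing in (808.0, 760.0):  # 1% above and 5% below an 800 pps link
        marks = simulate_marks(pacing, 800.0, bucket_packets=16, n_packets=1000)
        print(pacing, "pps pacing:", sum(marks), "of", len(marks), "marked")
```

With these made-up numbers, pacing 1% above the line rate marks every packet after the first one, because the queue created by the initial burst never drains. Pacing 5% below the line rate lets the burst drain after a few hundred packets, and marking stops.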

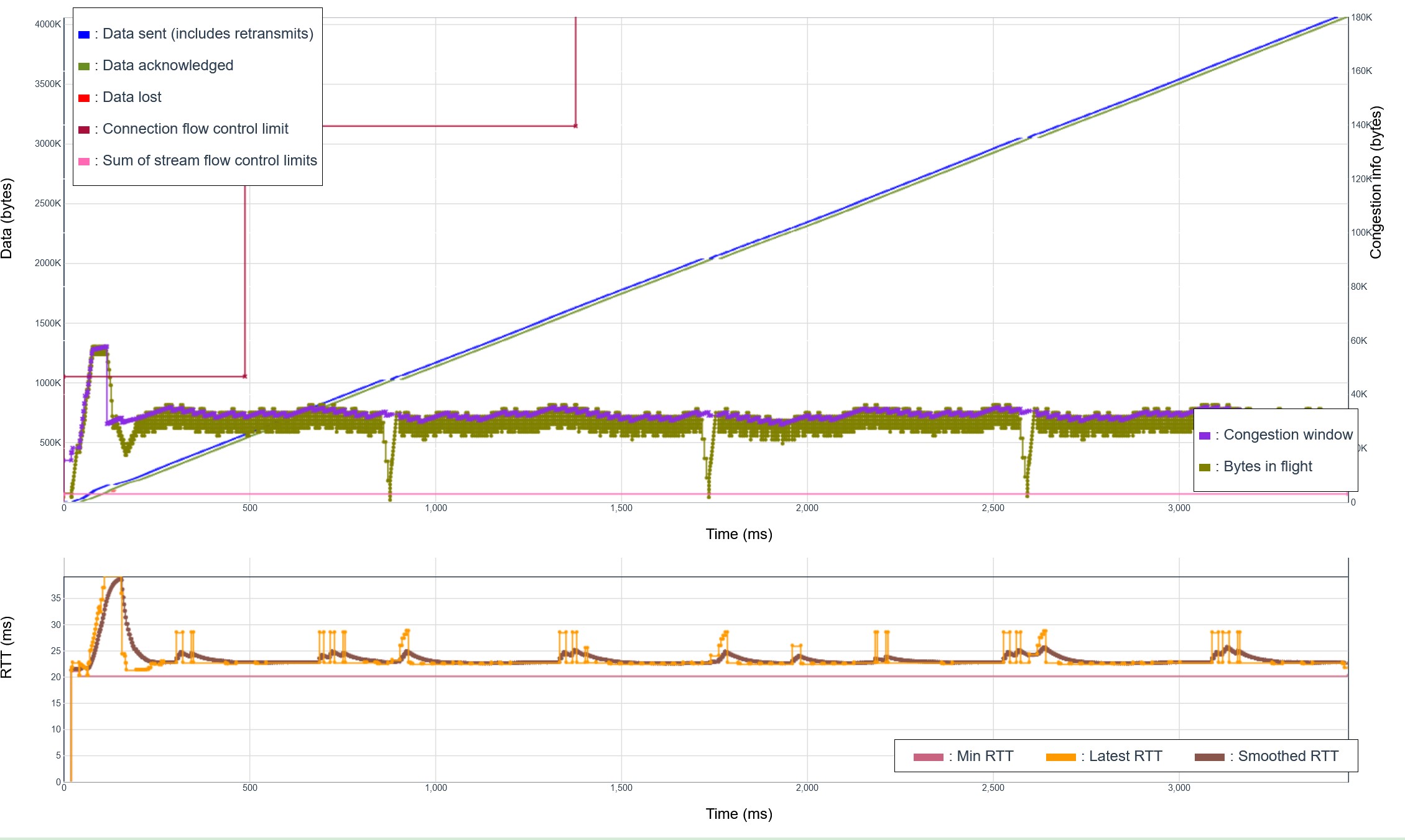

This was fixed by testing the time needed to send a full window of packets. If the sender learns that a lot of packets were marked on a link that was not congested before, and that the "epoch" lasted longer than the RTT, then it classifies the measurement as "suspect" and only slows down transmission a little. With that, the transmission becomes even smoother, and the transfer time in the test drops to less than 3.5 seconds, as seen in this updated visualization:
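The test described above could look roughly like the following sketch. The thresholds (`high_mark`, `low_alpha`, `suspect_cap`) and the function names are hypothetical values picked for the illustration; the actual Picoquic code may use different criteria and a different reduction.

```python
def classify_epoch(marked_fraction, previous_alpha, epoch_duration_s, rtt_s,
                   high_mark=0.5, low_alpha=0.1):
    """Label a measurement epoch as "suspect" or "normal".

    Assumptions (hypothetical thresholds):
    - "a lot of packets were marked" means marked_fraction >= high_mark
    - "not congested before" means previous_alpha <= low_alpha
    """
    sudden = marked_fraction >= high_mark and previous_alpha <= low_alpha
    stretched = epoch_duration_s > rtt_s  # window took longer than one RTT
    return "suspect" if (sudden and stretched) else "normal"


def window_after_epoch(cwnd, marked_fraction, verdict, suspect_cap=0.1):
    """Reduce the window; a suspect epoch only gets a small, capped reduction."""
    if verdict == "suspect":
        marked_fraction = min(marked_fraction, suspect_cap)
    return cwnd * (1.0 - marked_fraction / 2.0)


if __name__ == "__main__":
    # 90% of packets marked, no prior congestion, epoch lasted 30 ms on a 20 ms RTT
    verdict = classify_epoch(0.9, 0.0, epoch_duration_s=0.030, rtt_s=0.020)
    print(verdict, window_after_epoch(100.0, 0.9, verdict))
```

The design choice in this sketch is to keep reacting to the marks, just less aggressively, since a stretched epoch suggests the marks came from the sender's own burst rather than from sustained congestion.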

If you want to start or join a discussion on this post, the simplest way is to send a toot on the Fediverse/Mastodon to @huitema@social.secret-wg.org.