22 Sep 2020

Sometimes, one looks at a bug list and finds one that seems easy enough. I did just that two days ago, looking at issue #1023 in the Picoquic project, “Simulate DDOS by repeated Client-Hello”. I had entered that issue four weeks ago, while reviewing possible improvements. I had some free time, it seemed easy, so I just started the work. Guess what: this turned out to be a vivid illustration of the classic software development principle, if it is not tested, it probably does not work.

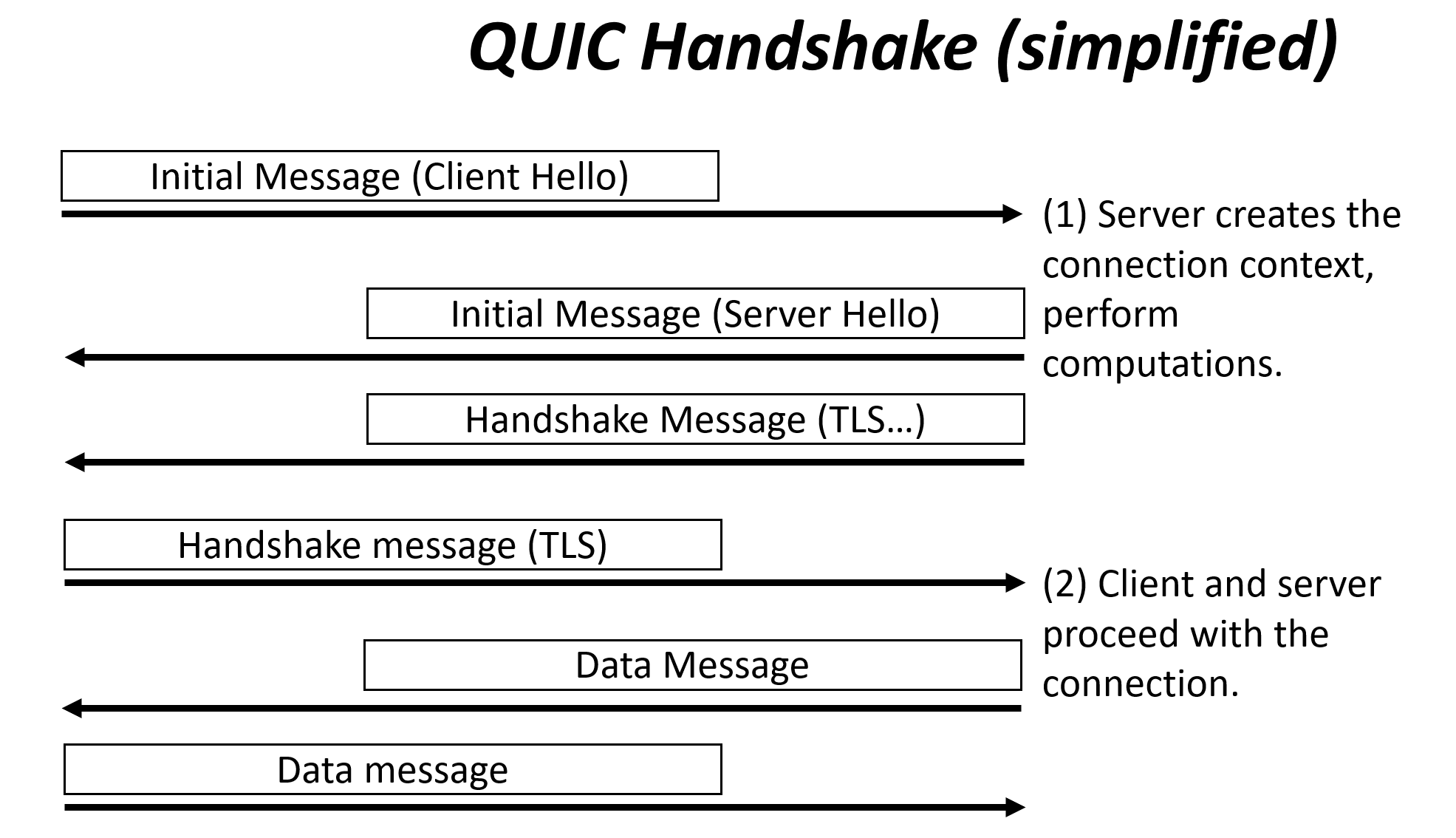

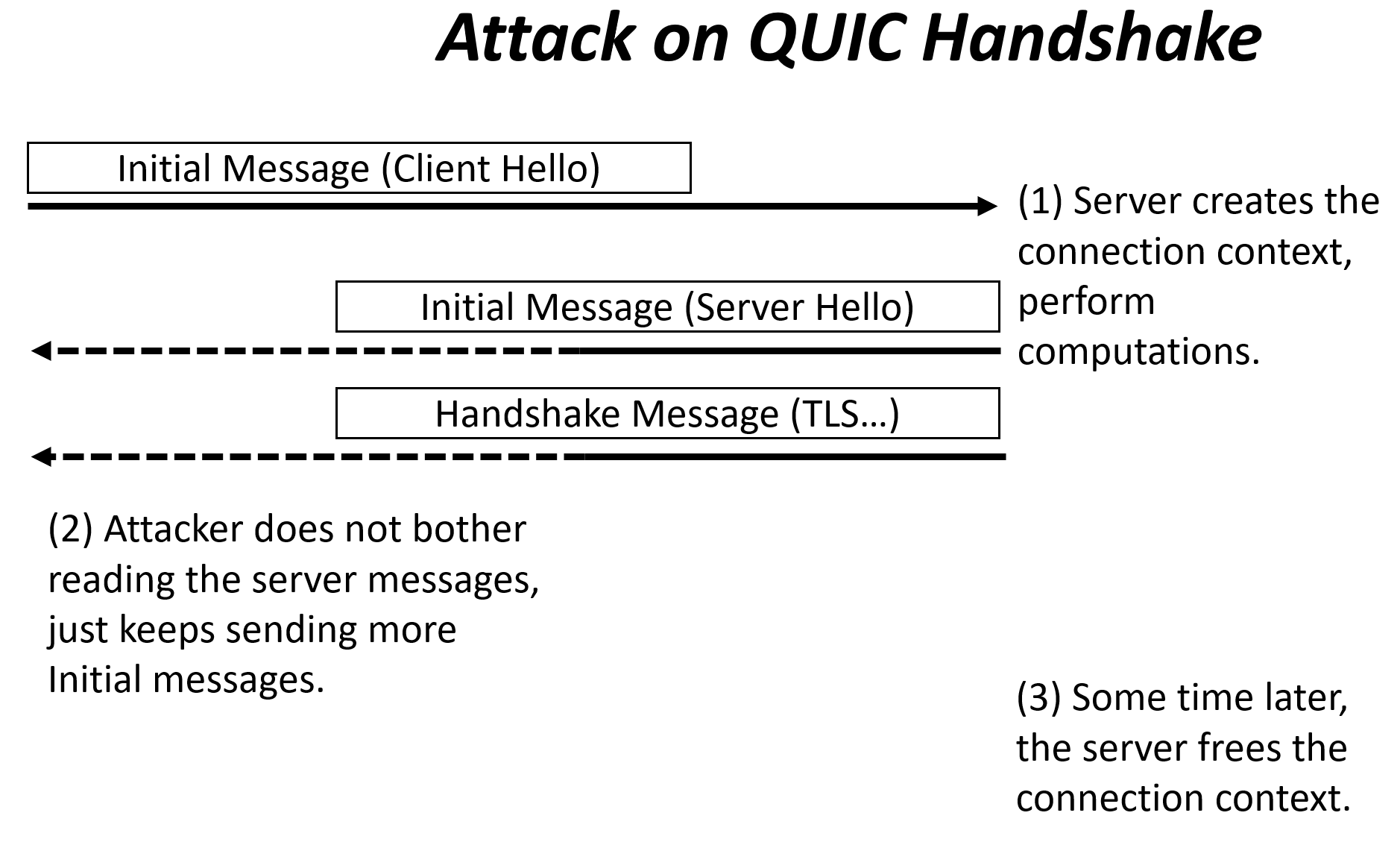

The working group has known since the beginning of the QUIC project that QUIC servers can be the target of Distributed Denial of Service (DDOS) attacks. QUIC runs over UDP. The first messages of a QUIC session carry a TLS handshake between client and server, and that handshake involves a lot of cryptographic computation. A botnet could manufacture vast quantities of client handshake messages, apparently coming from a variety of IP addresses. The server would attempt to perform the cryptographic computations for each of them, in the process exhausting its CPU resources and becoming unable to serve real customers. That’s why the QUIC transport draft specifies a “Retry” process, in many ways similar to the SYN Cookies of TCP, but servers only use it when they anticipate attacks. Otherwise, they just use the standard QUIC handshake, pictured in the QUIC Handshake figure below.

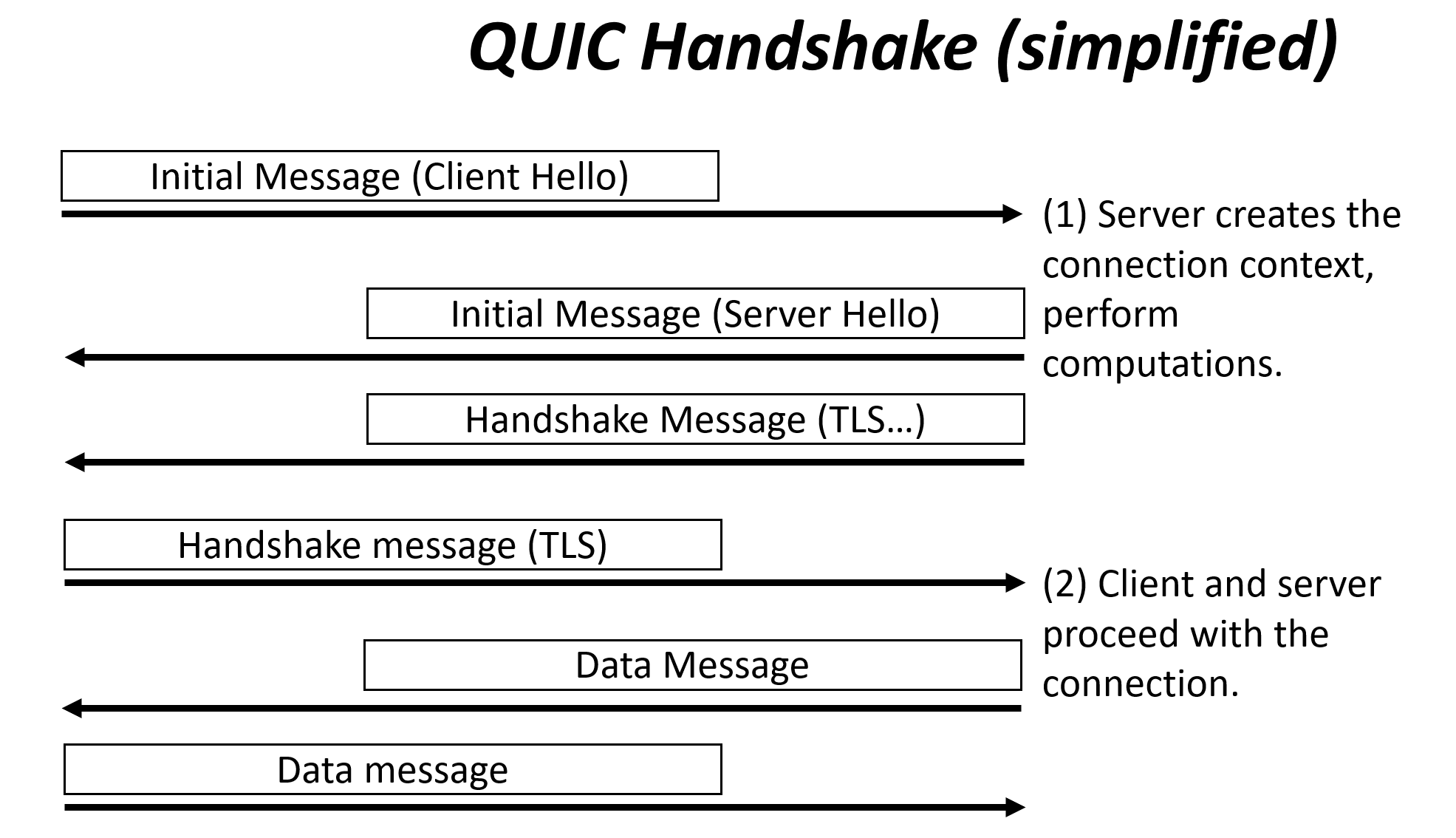

Picoquic does support the Retry process, and has supported it for a long time, but only as a startup option. I wanted to check what happens if a server that did not enable that option becomes the target of an attack. I wrote a small simulation in which the simulated attacker first built a long series of “Initial” messages and then sent them to the server, as shown below in the Attack figure.
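The post does not show the test code itself. The sketch below, with hypothetical names (`attack_packet_t`, `build_attack_schedule`), only illustrates the shape of such a schedule: forged Initials spread evenly over a window of simulated time, each from a different fake source address. With 10,000 packets over one second, that is one packet every 100 microseconds.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical record for one forged Initial packet in the simulation. */
typedef struct {
    uint64_t arrival_us;   /* simulated arrival time, in microseconds */
    uint32_t spoofed_ipv4; /* forged source address, host byte order */
    uint16_t spoofed_port; /* forged source port */
} attack_packet_t;

/* Build n forged Initial packets spread evenly over duration_us of
 * simulated time, each from a different fake source address.
 * Returns the number of packets written. */
size_t build_attack_schedule(attack_packet_t *pkts, size_t n, uint64_t duration_us)
{
    if (n == 0) {
        return 0;
    }
    uint64_t spacing = duration_us / n;
    for (size_t i = 0; i < n; i++) {
        pkts[i].arrival_us = (uint64_t)i * spacing;
        /* 10.0.0.0/8 base plus index: distinct addresses for n < 2^24 */
        pkts[i].spoofed_ipv4 = 0x0A000000u + (uint32_t)i;
        pkts[i].spoofed_port = (uint16_t)(1024 + (i % 60000));
    }
    return n;
}
```

The attacker never waits for the server: all packets are prepared up front, which is what makes the simulation a worst case for a server that runs the full handshake on every Initial.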

The simulation was running in simulated time, but I was also measuring the “real” time needed by the code to process that series of messages. The code had been stressed before, but this was the first try of a full-scale DDOS attack, and as with any first time, I expected a few glitches. There were. In the first run, the test console filled up with debug traces complaining that connections could not be logged. In simulated time, the attack was sending 10,000 messages in one second, but printing all these debug messages took a minute and a half. Not good. Even with debug messages turned off, Picoquic was still unable to create enough log files. In hindsight, the reason is obvious: there is a limit to how many files a given process can open.

The attack was creating many connection contexts, each opening a log file. Lesson learned: Picoquic now has a limit on the number of debug files open simultaneously. That’s not a problem in normal operations, when applications can choose to keep detailed logs of each connection. During an attack, there will be fewer logs, but then one only needs to look at a few of them to understand what is going on.
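A minimal sketch of such a cap, assuming a single counter shared by all connections. The type `log_budget_t`, the `max_open` field and the helper functions are hypothetical, not picoquic's API. When the budget is exhausted, the connection simply runs without a log instead of failing.

```c
#include <stdbool.h>
#include <stdio.h>

/* Hypothetical budget for per-connection log files. */
typedef struct {
    int open_count; /* log files currently open across all connections */
    int max_open;   /* cap, chosen well below the process file limit */
    int skipped;    /* connections that got no log because of the cap */
} log_budget_t;

/* Reserve a slot for a new log file; fails when the cap is reached. */
bool log_slot_acquire(log_budget_t *b)
{
    if (b->open_count >= b->max_open) {
        b->skipped++;
        return false;
    }
    b->open_count++;
    return true;
}

void log_slot_release(log_budget_t *b)
{
    if (b->open_count > 0) {
        b->open_count--;
    }
}

/* Open a per-connection log if the budget allows; NULL means "no log". */
FILE *open_connection_log(log_budget_t *b, const char *path)
{
    if (!log_slot_acquire(b)) {
        return NULL;
    }
    FILE *f = fopen(path, "w");
    if (f == NULL) {
        log_slot_release(b); /* do not leak the slot if fopen fails */
    }
    return f;
}

void close_connection_log(log_budget_t *b, FILE *f)
{
    if (f != NULL) {
        fclose(f);
        log_slot_release(b);
    }
}
```

The design choice is to degrade gracefully: logging is a debugging aid, so running out of log slots must never prevent a connection from being served.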

Solving this logging issue improved performance quite a bit. The simulation of 10,000 DDOS messages over 1 second now took 11 seconds to run. That was a bit slow, but the interesting point is that the CPU time was not, as expected, dominated by the crypto operations. Instead, a fair bit of time appeared to be spent in the hash tables. That turned out to be a test issue. The default simulation setup configures the server with small hash tables, sufficient for the few connections in most other simulation scenarios. But here we needed to handle a large number of connections, and we were seeing lots of hash collisions. After fixing the setup to use larger hash tables, the code handled 10,000 connection attempts in a bit more than 8 seconds, about 1,200 connections per second. More importantly, the CPU cost was now completely dominated by crypto operations.
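The effect of an undersized table can be checked with a little arithmetic. In a chained hash table with m buckets holding n keys, a successful lookup costs on average about 1 + (n − 1)/(2m) key comparisons under uniform hashing. The sketch below measures this directly; the bucket counts used in the usage note (16 and 16,384) are hypothetical and not picoquic's actual defaults, and `splitmix64` merely stands in for random connection IDs.

```c
#include <stdint.h>
#include <stdlib.h>

/* splitmix64: deterministic generator standing in for random connection IDs. */
static uint64_t splitmix64(uint64_t *state)
{
    uint64_t z = (*state += 0x9E3779B97F4A7C15ull);
    z = (z ^ (z >> 30)) * 0xBF58476D1CE4E5B9ull;
    z = (z ^ (z >> 27)) * 0x94D049BB133111EBull;
    return z ^ (z >> 31);
}

/* Average number of key comparisons for a successful lookup in a chained
 * hash table with nb_buckets buckets holding n keys. The key at position j
 * (1-based) of its chain costs j comparisons, so a chain of length k costs
 * k*(k+1)/2 in total. Returns -1.0 on bad input or allocation failure. */
double avg_lookup_cost(size_t n, size_t nb_buckets, uint64_t seed)
{
    if (nb_buckets == 0) {
        return -1.0;
    }
    if (n == 0) {
        return 0.0;
    }
    size_t *chain = calloc(nb_buckets, sizeof *chain);
    if (chain == NULL) {
        return -1.0;
    }
    uint64_t state = seed;
    for (size_t i = 0; i < n; i++) {
        uint64_t cid = splitmix64(&state);
        chain[cid % nb_buckets]++;
    }
    double total = 0.0;
    for (size_t b = 0; b < nb_buckets; b++) {
        total += (double)chain[b] * (double)(chain[b] + 1) / 2.0;
    }
    free(chain);
    return total / (double)n;
}
```

With 10,000 connections, 16 buckets give roughly 313 comparisons per lookup, while 16,384 buckets bring it down to about 1.3, which is why resizing the test tables removed the hash table cost from the profile.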

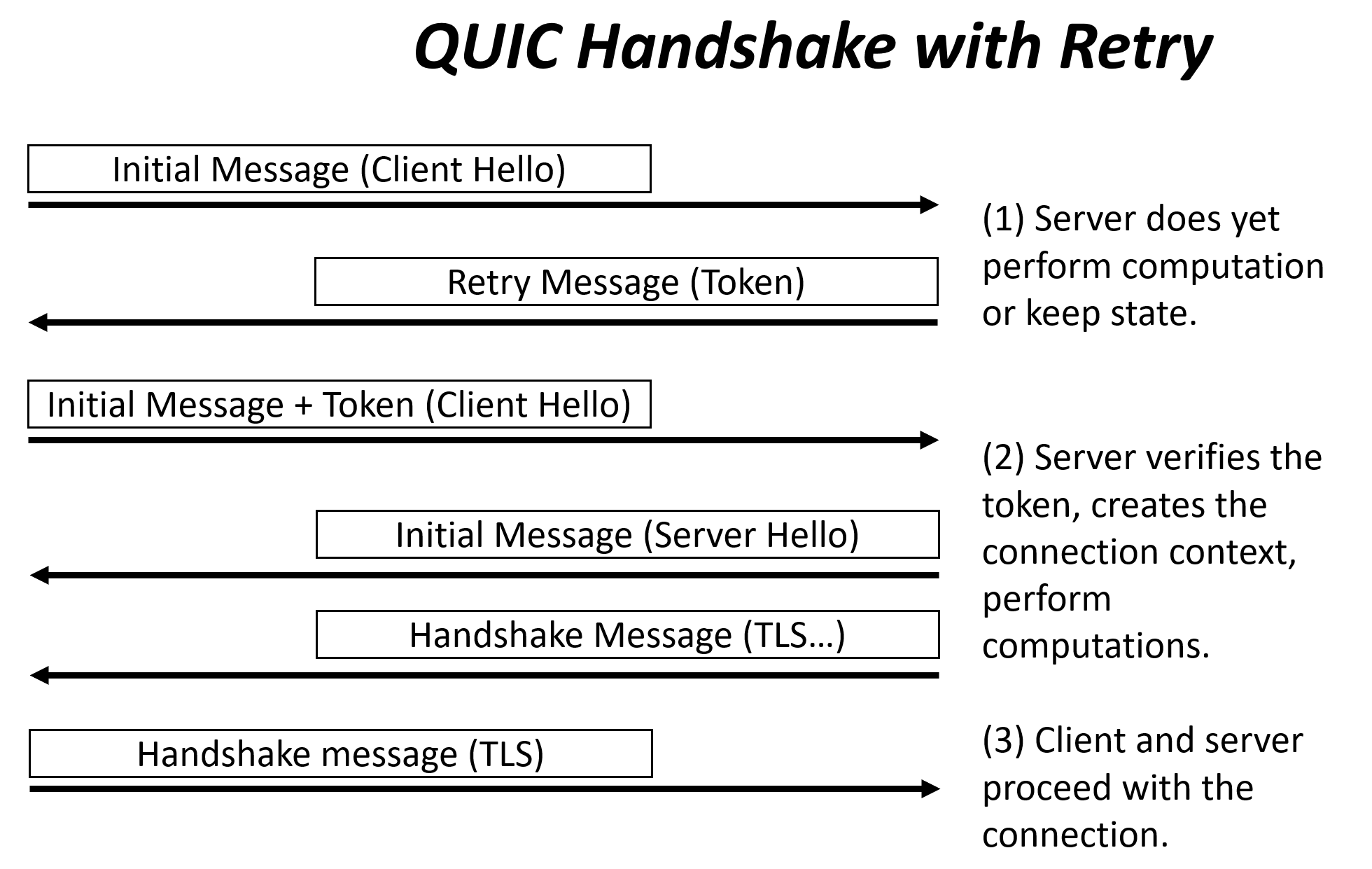

Handling 1,200 connections per second is good, but attackers can forge messages much faster than that. Servers need to be able to turn on the “Retry” defense automatically. As shown in the Retry picture above, the server responds to an Initial message with a Retry request. If the client responds with a copy of the retry token, the server allocates resources and performs the computations. But if an attacker just sends an Initial message and does not wait for the response, the server has expended very few resources. An attacker that uses forged IP addresses will not receive the retry tokens, and thus will not be able to consume significant resources on the server.
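The stateless nature of this defense can be sketched as follows. This is an illustration under stated assumptions, not picoquic's token format: the token layout, the 3-second lifetime and the function names are hypothetical, and `toy_mac` (FNV-1a seeded with a secret) is a deliberately insecure stand-in for the HMAC or AEAD protection a real server would use. The token carries the client's original Destination Connection ID, because the client's second Initial is addressed to the connection ID chosen in the Retry. The server keeps no per-client state: it recomputes the tag from the token and the packet's source address, and only allocates a connection if they match.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MAX_CID_LEN 20              /* QUIC v1 connection IDs are at most 20 bytes */
#define TOKEN_LIFETIME_US 3000000u  /* hypothetical 3-second validity window */

/* Hypothetical token layout, kept in clear for readability. */
typedef struct {
    uint64_t issued_us;          /* time the Retry was sent */
    uint8_t odcid[MAX_CID_LEN];  /* client's original Destination CID */
    size_t odcid_len;
    uint64_t tag;                /* binds the fields above to the client address */
} retry_token_t;

/* Toy keyed hash: FNV-1a seeded with the secret. NOT secure; it only
 * stands in for HMAC or AEAD protection in this illustration. */
static uint64_t toy_mac(uint64_t secret, const uint8_t *data, size_t len)
{
    uint64_t h = 0xcbf29ce484222325ull ^ secret; /* FNV offset basis mixed with key */
    for (size_t i = 0; i < len; i++) {
        h ^= data[i];
        h *= 0x100000001b3ull;                   /* FNV-1a 64-bit prime */
    }
    return h;
}

static uint64_t token_tag(uint64_t secret, uint32_t ipv4, uint16_t port,
                          const retry_token_t *t)
{
    uint8_t buf[4 + 2 + 8 + MAX_CID_LEN];
    size_t len = 0;
    memcpy(buf + len, &ipv4, sizeof ipv4); len += sizeof ipv4;
    memcpy(buf + len, &port, sizeof port); len += sizeof port;
    memcpy(buf + len, &t->issued_us, sizeof t->issued_us); len += sizeof t->issued_us;
    memcpy(buf + len, t->odcid, t->odcid_len); len += t->odcid_len;
    return toy_mac(secret, buf, len);
}

/* Built instead of a connection context when answering with a Retry. */
bool make_retry_token(retry_token_t *t, uint64_t secret, uint32_t ipv4, uint16_t port,
                      const uint8_t *odcid, size_t odcid_len, uint64_t now_us)
{
    if (odcid_len > MAX_CID_LEN) {
        return false;
    }
    memset(t, 0, sizeof *t);
    t->issued_us = now_us;
    memcpy(t->odcid, odcid, odcid_len);
    t->odcid_len = odcid_len;
    t->tag = token_tag(secret, ipv4, port, t);
    return true;
}

/* Checked when an Initial carrying a token arrives: only if this succeeds
 * does the server allocate a connection and start the TLS handshake. */
bool check_retry_token(const retry_token_t *t, uint64_t secret, uint32_t ipv4,
                       uint16_t port, uint64_t now_us)
{
    if (t->odcid_len > MAX_CID_LEN) {
        return false;
    }
    if (now_us < t->issued_us || now_us - t->issued_us > TOKEN_LIFETIME_US) {
        return false;
    }
    return t->tag == token_tag(secret, ipv4, port, t);
}
```

A packet from a forged address never yields a usable token, because the attacker does not see the Retry sent to that address, and a token replayed from another address fails the tag check.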

To automate the defense, I programmed Picoquic to keep track of the number of “half-open” connections, and to trigger the retry process automatically if that number exceeds a threshold. This worked just as expected: the 10,000 messages were absorbed in 500 milliseconds, faster than real time. But of course, another issue appeared when I ran the full test suite: the HTTP stress test started to fail.
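A minimal sketch of that trigger, assuming a single counter that is incremented when a connection context is created for an Initial and decremented when the handshake completes or the context is discarded. The names, the threshold field and the strict "greater than" comparison are assumptions; picoquic's actual accounting may differ.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical server-wide load tracking. */
typedef struct {
    uint32_t half_open;       /* contexts created, handshake not yet complete */
    uint32_t retry_threshold; /* above this, new Initials get a Retry */
} server_load_t;

/* True when new tokenless Initials should be answered with a Retry. */
bool retry_required(const server_load_t *s)
{
    return s->half_open > s->retry_threshold;
}

/* A connection context was allocated for an incoming Initial. */
void on_connection_created(server_load_t *s)
{
    s->half_open++;
}

/* The handshake completed, or the half-open context was discarded. */
void on_handshake_done_or_dropped(server_load_t *s)
{
    if (s->half_open > 0) {
        s->half_open--;
    }
}
```

Because the mode is derived from a live counter, it switches back off as soon as the half-open connections drain, which is exactly the transition that the HTTP stress test exercised.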

The HTTP stress test verifies that a server can handle many quasi-simultaneous HTTP connections and requests. The test reported that one of these connections was failing. Analysis showed that this connection arrived when the number of half-open connections was above the threshold and received a Retry request. The client responded as expected, but its reply arrived when the number of half-open connections had dropped below the threshold. There was a bug in the server code: it did not correctly process the retry token in that case. So I fixed that, and in the process I also fixed another issue and ended up updating two test sequences.
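The post does not detail the faulty code path, so the sketch below is only a hypothetical illustration of a decision order that avoids this class of bug: whether an Initial carries a token is checked first, independently of the current retry mode. The enum and function names are made up for the example, and dropping Initials whose token fails verification is a simplification; a real server's policy for invalid tokens may differ.

```c
#include <stdbool.h>

/* Hypothetical outcomes for an incoming Initial packet. */
typedef enum {
    ACCEPT_NEW,       /* no token, no attack suspected: normal handshake */
    SEND_RETRY,       /* no token, retry mode on: answer statelessly */
    ACCEPT_VALIDATED, /* valid token: address proven, normal handshake */
    DROP              /* token present but invalid (simplified policy) */
} initial_action_t;

/* retry_mode: current state of the automatic defense.
 * has_token:  the Initial carries a token.
 * token_valid: the token passed verification. */
initial_action_t handle_initial(bool retry_mode, bool has_token, bool token_valid)
{
    if (has_token) {
        /* Evaluate the token whenever one is present, even if retry mode
         * has been turned off since the Retry was sent: the client may be
         * answering a Retry issued while the load was above the threshold. */
        return token_valid ? ACCEPT_VALIDATED : DROP;
    }
    return retry_mode ? SEND_RETRY : ACCEPT_NEW;
}
```

The case that broke the stress test corresponds to `handle_initial(false, true, true)`: retry mode is off, but the client is legitimately returning a token, and the connection must still be accepted.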

In conclusion, this “simple” DDOS issue required a bit more work than the typical bug fix. Bugs lurk in unsuspected places, such as the configuration of logging or the dimensioning of hash tables. Fixing them could only be done safely because the Picoquic test suite is fairly extensive. But fixing bugs is fun. There is probably still more work to do to further reduce the resources consumed when generating the retry messages, but with just these fixes the server is more robust in the face of attacks.