21 Apr 2020

Since 1988, the “slow start” mechanism designed by Van Jacobson has been used in most TCP-IP stacks. Slow start doubles the TCP congestion window every round trip. That works well for most connections, but not for satellite links as already explained in 1999 in RFC 2488. The time to reach link saturation varies as the base 2 logarithm of the ratio between initial window and saturation window multiplied by the round-trip time. For a high-speed satellite link, that can easily mean waiting many seconds before reaching nominal throughput, because of the 600 milliseconds RTT. The classic solution in TCP is to deploy performance enhancing proxies (PEP) in front of the satellite link. But QUIC is an encrypted transport, and that solution does not work. What would work is an enhanced algorithm that converges faster than the classic slow start. Can we build one? I think recent improvements to the “Picoquic” implementation QUIC point to an interesting solution.

The first enhancement to Picoquic was to implement BBR. At equilibrium, BBR sends packets at the data rate of the bottleneck. It relies on bandwidth estimates, and thus is way less sensitive to transmission errors than classic TCP or Cubic. This solve some of the problems mentioned by Kuhn et al. in their “QUIC for SATCOM” draft. But BBR does not solve the slow start problem. Bandwidth estimation requires sending a congestion window worth of packets, and then waiting for acknowledgements. This takes two round-trips, and for satellite that's too long. For long delay links, Picoquic replaces the start-up algorithm of BBR by a version of Hystart modified to include a peak bandwidth estimate.

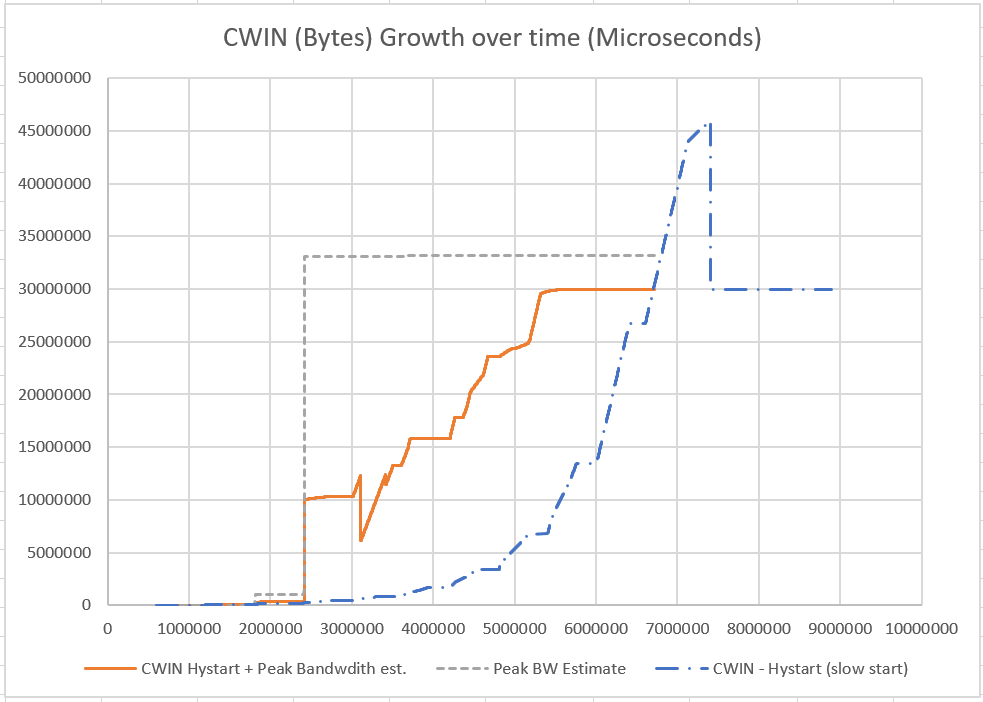

During the initial phase of the connection, Picoquic constantly measures the peak bandwidth by tweaking the packet pacing algorithm to send occasional bursts of data, and then monitoring the amount of data received in short intervals. Picoquic will then increase the congestion window if it appears smaller than the product of RTT by half the estimated peak bandwidth. The following graph shows the result for a 250 Mbps satellite connection.

As seen on the graph, the basic Hystart algorithm converges to the desired congestion window after 7.4 seconds, but the modified version converges after only 5.2 seconds. The test requires transmitting 100MB. This takes 8.9 seconds in the basic version, but only 6.7 seconds in the modified version. There is no packet loss observed in either version. These are encouraging results, although there is clear evidence that the modified version could still be improved. For example, the peak bandwidth is estimated after 2.4 seconds, so in theory there should be a way to converge in fewer than 5 seconds. But then, there is the difference between theory and practice.

In theory, bursts of data cannot be received faster than the bottleneck’s bandwidth. If the measuring interval includes the reception of a data burst, the average bandwidth measured during that interval will be higher than the pacing rate, but lower than the peak bandwidth. In practice, this is not quite true because the bandwidth measurement is imperfect. It is affected by transmission jitter and acknowledgement delays. These problems were encountered in practical deployments of algorithm like packet-pair or chirping: algorithms that appear to work well in simulations were found difficult to deploy in practice, as noted in a previous blog.

The two sources of peak bandwidth measurement errors are somewhat compensated in QUIC, but not completely. The acknowledgements in QUIC include an estimate of the acknowledgement delay. The time stamp extension eliminates the sensitivity to delay jitter on the return path. But even so, jitter on the send path can delay the reception of the first packet in a burst, or inversely cause a below average transmission delay of the last packet in a burst. This can lead to an overestimate of the peak bandwidth.

Picoquic attempts to reduce the error on the peak bandwidth estimate by using long bursts – 25 milliseconds if the RTT is larger than 100 milliseconds. In BBR, the bandwidth is estimated over a whole round-trip. The estimation errors on the peak bandwidth should be similar to the BBR estimation errors when the RTT is about 25 ms. We see some estimate error in the graph: the peak bandwidth is estimated at 264 Mbps (33MBs), which is larger than the actual bandwidth of 250 Mbps. The effect would probably be more pronounced if the simulation added some delay jitter on the path.

The current Picoquic design is conservative, comparing the current congestion window the only half the product of the RTT by the estimated peak bandwidth, instead of the full product. Overestimating the peak bandwidth could lead to overshooting, while underestimating by even 50% is easily corrected. That’s what we see in the graph: BBR quickly moves to the “probe Bandwidth” state, and the data rate increases from 133 Mbps to 250 Mbps in just over 2 seconds.

It is very likely that some tuning of the Picoquic software would further improve the results. The BBR implementation in Picoquic follows the specifications in an expired draft, and some parts look suboptimal. But getting an initial ramp up that works faster than slow start is a nice first step. It markedly improves performance on satellite links, and in fact on all long delay links included in the battery of Picoquic tests.

Update, April 21 2020:

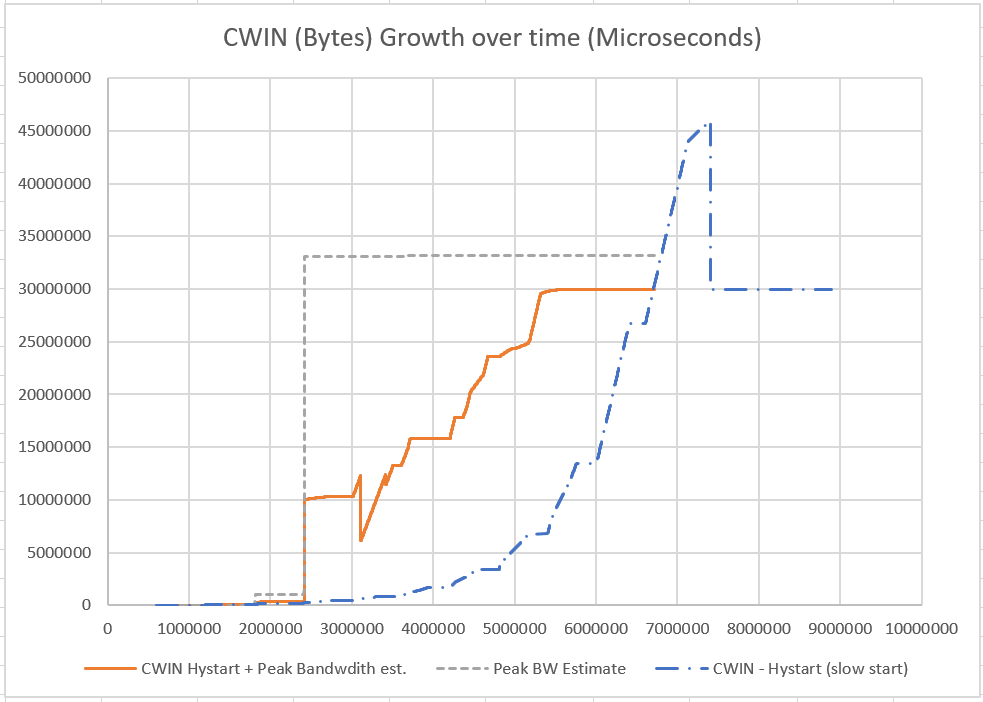

As mentioned above, some tuning was indeed required. In the graph above, we see that Picoquic exits the Hystart mode shortly after setting the target bandwidth to half the peak value, and switches to BBR's "probe bandwidth" mode, which is slower. This was due to a pub in pacing implementation, setting the leaky bucket to an excessively high value. After fixing that bug, we obtain a much better looking curve:

The peak bandwidth estimate does not change. The growth of CWIN with peak bandwidth estimate has more or less the same shape as the growth of basic Hystart, except much steeper. The CWIN value never exceeds twice the bandwidth delay product, and we do not observe packet losses. The 100MB transfer completes 6.3 seconds after the start of the connection, instead of 6.7 seconds before the pacing bug was fixed. The 6.3 seconds include one RTT for establishing the connection, and another for the acknowledgement of the data. The actual data transfer lasts 5.1 seconds, for an effective data rate of 155 Mbps, or 62% of the link capacity.

There are most probably other bugs to fix in the BBR implementation of Picoquic, but as mentioned above this is already encouraging.

Second Update, April 22, 2020:

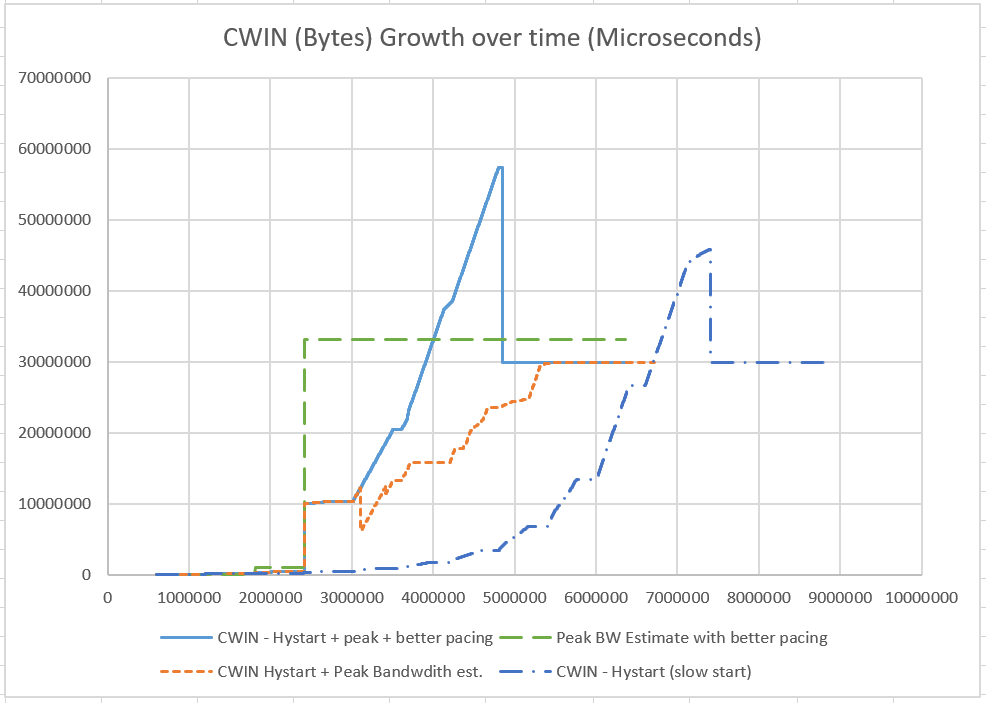

There was of course another bug to fix. The congestion control implementation was waiting for a first RTT measurement after the transfer started, instead of using the RTT measured during the connection handshake. That's important, because Picoquic uses a larger initial RTT for long delay links. Using that larger RTT sooner means that the peak bandwidth is also measured sooner, and we end up shortening the total time by 2 RTT, as seen in this updated graph:

The 100MB transfer now completes 5.2 seconds after the start of the connection, instead of 6.3 seconds before. The 5.2 seconds include one RTT for establishing the connection, and another for the acknowledgement of the data. The actual data transfer lasts 4 seconds, for an effective data rate of 200 Mbps, or 80% of the link capacity.